Drawing Computer Vision analyses in Field (Contours, Lines and OpenPose)

One of the analyses that the standard analysis package offers is contour extraction. For example, running:

```shell
python3 process.py --video /Users/marc/Downloads/IMG_2638.MOV --output_dir out2 --do_background --compute_contours --do_flow --do_lines --do_circles > contours.json
```

writes the analysis to standard output, so this produces a file called contours.json in the current directory.
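Each line of contours.json holds the analysis of one video frame. That is why the code on this page can loop up to `q.lines.length` and ask for `q.parse(frame)`. If you want to poke at a file outside of Field, here's a minimal plain-JavaScript sketch of a reader with those same two members. It's a hypothetical stand-in, not Field's `loadJSONLines`, and the sample data is made up. Only the field names (`contours`, `mean_motion_x`, `mean_motion_y`) come from the code further down.

```javascript
// A hypothetical plain-JavaScript stand-in for Field's loadJSONLines(...):
// keep the raw lines, and only parse a frame when it's asked for.
class JSONLines {
    constructor(text) {
        // one JSON object per non-empty line, one line per video frame
        this.lines = text.split("\n").filter(line => line.trim().length > 0)
    }

    parse(index) {
        return JSON.parse(this.lines[index])
    }
}

// a made-up two-frame file with the shape the code on this page expects
const text = [
    JSON.stringify({ contours: [[[0, 0], [10, 0], [10, 10]]], mean_motion_x: 0.5, mean_motion_y: -0.25 }),
    JSON.stringify({ contours: [], mean_motion_x: 0, mean_motion_y: 0 }),
].join("\n")

const q = new JSONLines(text)
console.log(q.lines.length)                // 2
console.log(q.parse(0).contours[0].length) // 3
```

Under Node, you could point it at a real file with `new JSONLines(require("fs").readFileSync("contours.json", "utf8"))`.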

Field can load these contour files, and you can use them as the basis for various abstractions. On this page we’ll build up one such ‘video / vector’ hybrid. It assumes you have read the introductory documentation for Field, as well as the documentation for basic drawing and for “the Stage”. This page is written ‘cookbook style’: lumps of code for you to tease apart. For the theory, read the aforementioned pages first!

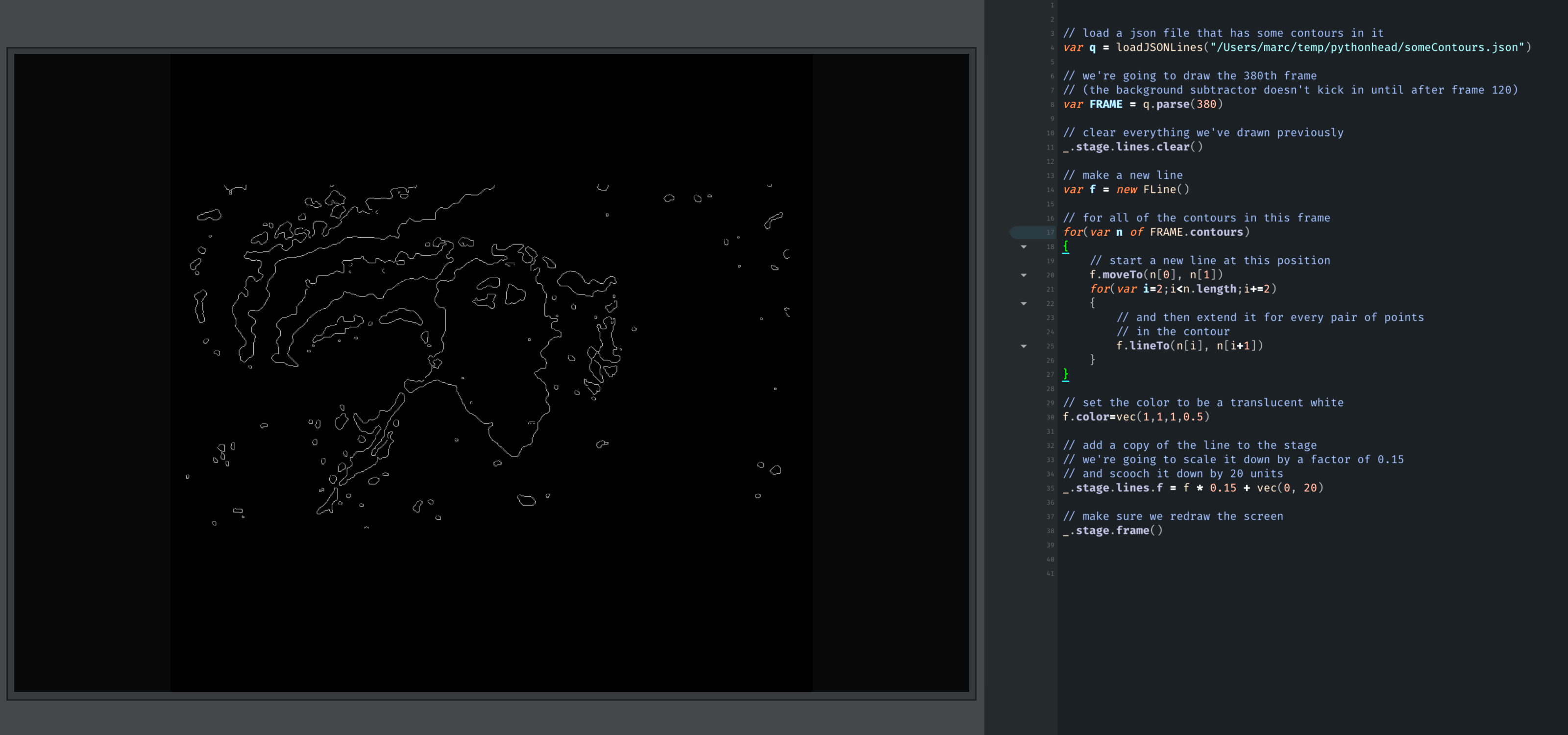

1. Checking to see that it’s loaded!

First, let’s just get something on the screen. Let’s run this code in a Field document with a Stage in it:

```javascript
// load a json file that has some contours in it
var q = loadJSONLines("/Users/marc/temp/pythonhead/contours.json")

// we're going to draw the 380th frame
// (the background subtractor doesn't kick in until after frame 120)
var FRAME = q.parse(380)

// clear everything we've drawn previously
_.stage.lines.clear()

// make a new line
var f = new FLine()

// for all of the contours in this frame
for(var n of FRAME.contours)
{
    // start a new segment at the contour's first point
    f.moveTo(n[0][0], n[0][1])

    // and then extend it through every remaining point in the contour
    for(var i=1;i<n.length;i++)
    {
        f.lineTo(n[i][0], n[i][1])
    }
}

// set the color to be a translucent white
f.color=vec(1,1,1,0.5)

// add a copy of the line to the stage,
// scaled down by a factor of 0.15 and scooched down by 20 units
_.stage.lines.f = f * 0.15 + vec(0, 20)

// make sure we redraw the screen
_.stage.frame()
```

Running this code yields an image on the Stage: a single frame of me waving at the camera.
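The loop above boils down to a simple recipe. There is one `moveTo` at each contour's first point and a `lineTo` through each remaining point, then a single scale and offset is applied to the whole line. Here's that recipe in plain JavaScript, producing a list of commands instead of an FLine. The helper and its command objects are made up for illustration. It reads `f * 0.15 + vec(0, 20)` as 'scale, then translate', which is how the expression is written.

```javascript
// Hypothetical helper: turn a frame's contours into moveTo/lineTo commands,
// mirroring the Field loop above, then apply "scale, then offset" to every
// point, as `f * 0.15 + vec(0, 20)` reads.
function contoursToCommands(contours, scale, offset) {
    const commands = []
    for (const contour of contours) {
        contour.forEach(([x, y], i) => {
            commands.push({
                op: i === 0 ? "moveTo" : "lineTo", // a new segment per contour
                x: x * scale + offset[0],
                y: y * scale + offset[1],
            })
        })
    }
    return commands
}

// two made-up contours: three corners of a triangle, and a two-point edge
const commands = contoursToCommands(
    [[[0, 0], [100, 0], [100, 100]], [[200, 200], [300, 200]]],
    0.15, [0, 20])
console.log(commands.map(c => c.op))
// [ 'moveTo', 'lineTo', 'lineTo', 'moveTo', 'lineTo' ]
```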

2. Making it move

It’s easier to see that this came from some video when it moves, so let’s make it move:

```javascript
// load a json file that has some contours in it
var q = loadJSONLines("/Users/marc/temp/pythonhead/contours.json")

while (_.stage.frame())
{
    // starting at frame 120 until the end of the file
    for(var frame=120;frame<q.lines.length;frame++)
    {
        // read that line
        var FRAME = q.parse(frame)

        // remove everything we’ve drawn so far
        _.stage.lines.clear()

        // make a new line
        var f = new FLine()

        for(var n=0;n<FRAME.contours.length;n++)
        {
            var contour = FRAME.contours[n]
            f.moveTo(contour[0][0], contour[0][1])
            for(var i=1;i<contour.length;i++)
            {
                f.lineTo(contour[i][0], contour[i][1])
            }
        }

        // as before, a translucent white line, scaled and moved into place
        f.color=vec(1,1,1,0.5)
        _.stage.lines.f = f * vec(100,70) + vec(0, 15)

        // wait for 0.05 seconds between frames
        _.stage.frame(0.05)
    }
}
```

Running this code (with alt / option - up) animates the contours. That seems like it’s working!
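The `while`/`for` pair above plays frames 120 to the end of the file, then starts again, forever. It waits 0.05 seconds between frames, which is roughly 20 frames per second. The order it visits frames in can be sketched as a plain JavaScript generator. `playbackOrder` and `take` are hypothetical helpers. The early return for files with no frames past the warm-up is our addition, so that the generator can't loop forever without producing anything.

```javascript
// The order the playback loops visit frames in: warmup .. total-1, then
// around again, forever. (Hypothetical helper, not part of Field.)
function* playbackOrder(total, warmup = 120) {
    if (total <= warmup) return // nothing to show; avoid spinning forever
    while (true) {
        for (let frame = warmup; frame < total; frame++) yield frame
    }
}

// Take the first n values from a (possibly endless) generator.
function take(generator, n) {
    const out = []
    for (const value of generator) {
        if (out.length >= n) break
        out.push(value)
    }
    return out
}

console.log(take(playbackOrder(123), 7)) // [ 120, 121, 122, 120, 121, 122, 120 ]
console.log(take(playbackOrder(100), 3)) // []
```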

3. Now ‘break it’

Now that we have this working, we can step away from it towards abstraction:

```javascript
// load a json file that has some contours in it
var q = loadJSONLines("/Users/marc/temp/pythonhead/contours.json")

// for-forever
while(true)
{
    // for-every frame in the file (after frame 120)
    for(var frame=120;frame<q.lines.length;frame++)
    {
        // parse the frame
        var FRAME = q.parse(frame)

        // *** and pull out two values from the analysis
        var noisex = FRAME.mean_motion_x*4
        var noisey = FRAME.mean_motion_y*4

        // remove everything we've drawn so far
        _.stage.lines.clear()

        var f = new FLine()

        // no moveTo this time: every contour joins onto the previous one,
        // and we only take every 4th point
        for(var n of FRAME.contours)
        {
            for(var i=0;i<n.length;i+=4)
            {
                // add some random noise in the direction of motion
                f.lineTo(n[i][0]+Math.random()*noisex,
                         n[i][1]+Math.random()*noisey)
            }
        }

        // set the color of the line to be the average color of the scene
        f.color=vec(FRAME.mean_blue/255,FRAME.mean_green/255,FRAME.mean_red/255,0.9)

        // draw the vertices as points too
        f.pointed=true
        f.pointSize=1

        _.stage.lines.add(f * vec(100,70) + vec(0, 15))

        _.stage.frame(0.05)
    }
}
```

Running this code, again with alt / option - up, breaks the figure up into a noisy scribble that shakes in the direction of the motion.
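Two details in this step are easy to miss. First, `Math.random()` returns a value in [0, 1), so the noise only ever pushes a point along the mean motion, never against it, and the whole scribble smears in the direction things are moving. Second, without a `moveTo`, the end of one contour joins onto the start of the next. Here is a plain-JavaScript sketch of the point computation. The random source is passed in so the result can be checked. The function name and its parameters are ours, not Field's.

```javascript
// Hypothetical helper: every `step`-th contour point, chained into one path,
// each pushed by random() * motion * gain along the frame's mean motion.
// `random` defaults to Math.random but can be swapped for a fixed value.
function jitteredPath(frame, step = 4, gain = 4, random = Math.random) {
    const noiseX = frame.mean_motion_x * gain
    const noiseY = frame.mean_motion_y * gain
    const points = []
    for (const contour of frame.contours) {
        for (let i = 0; i < contour.length; i += step) {
            // random() is in [0, 1), so points only move along the motion
            points.push([contour[i][0] + random() * noiseX,
                         contour[i][1] + random() * noiseY])
        }
    }
    return points
}

// a made-up frame: one six-point contour and a made-up mean motion
const sampleFrame = {
    contours: [[[0, 0], [1, 1], [2, 2], [3, 3], [4, 4], [5, 5]]],
    mean_motion_x: 1,
    mean_motion_y: -0.5,
}
console.log(jitteredPath(sampleFrame, 4, 4, () => 0.5)) // [ [ 2, -1 ], [ 6, 3 ] ]
```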

4. A little more video

The standard analysis package is also happy to emit a directory full of jpgs representing the original video and alternative versions of it. Let’s use those directories to texture the material we have here, allowing us to drag video around like paint.

```javascript
var q = loadJSONLines("/Users/marc/temp/pythonhead/contours.json")

// make a video textured layer from the directory
// given by the processor
var ll = _.stage.withImageSequence("/Users/marc/temp/pythonhead/out2/input/")

// with a little bit of motion blur
_.stage.background.a = 0.3

while(true)
{
    for(var frame=120;frame<q.lines.length;frame++)
    {
        // animate the video texture
        ll.frame=frame

        // draw the lines as before
        var FRAME = q.parse(frame)
        var noisex = FRAME.mean_motion_x*1.0
        var noisey = FRAME.mean_motion_y*1.0

        ll.lines.clear()

        var f = new FLine()
        for(var n of FRAME.contours)
        {
            for(var i=0;i<n.length;i+=4)
            {
                f.smoothTo(n[i][0]*0.1, n[i][1]*0.18)
            }
        }

        // texture the line
        ll.bakeTexture(f)

        f.pointed=true

        // more grit
        f.pointSize=FRAME.mean_motion_px/100-3

        // make it a little more red
        f.color=vec(1.3,0.5,0.3,1)

        // but the points tilt blue
        f.pointColor = vec(0.2,0.6,1.3,0.1)

        // jitter every vertex in the direction of motion
        f = f.byTransforming(x => vec(x.x+Math.random()*noisex, x.y+Math.random()*noisey,0))

        // scale it to the correct size
        ll.lines.add(f * vec(100,70) + vec(0, 15))

        _.stage.frame(0.05)
    }
}
```

This gives an abstraction where the video is dragged around like paint.
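About that 'little bit of motion blur': assume the Stage paints its background over the previous frame at opacity `_.stage.background.a` rather than clearing it. That is the usual way this kind of trail is made, and the Stage documentation has the details. Under that assumption, whatever was drawn k frames ago survives at (1 - a)^k of its original strength. With a = 0.3 that's 0.7 per frame, so a stroke drops below 1% after 13 frames, about 0.65 seconds at 0.05 seconds per frame. The arithmetic, as a small sketch under that assumption:

```javascript
// Assumed model of a translucent background: each frame, the old image is
// covered by the background at opacity `alpha`, so 1 - alpha of it survives.
function residual(alpha, frames) {
    return Math.pow(Math.max(0, 1 - alpha), frames)
}

// How many frames until a stroke has faded below `threshold` of its strength.
function framesUntilBelow(alpha, threshold) {
    if (alpha <= 0 || threshold <= 0) return Infinity // never fades / never reached
    let frames = 0
    while (residual(alpha, frames) >= threshold) frames++
    return frames
}

console.log(framesUntilBelow(0.3, 0.01))        // 13
console.log(framesUntilBelow(0.3, 0.01) * 0.05) // roughly 0.65 (seconds at 0.05 s per frame)
```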

5. Edges

We can also use other aspects of the analysis. Here’s some code that pulls out information from the line detector.

```javascript
var q = loadJSONLines("/Users/marc/temp/pythonhead/contours.json")

// make a video textured layer from the directory
// given by the processor
var ll = _.stage.withImageSequence("/Users/marc/temp/pythonhead/out2/input/")

// with a little bit of motion blur
_.stage.background.a = 0.3

while(true)
{
    for(var frame=120;frame<q.lines.length;frame++)
    {
        // animate the video texture
        ll.frame=frame

        // draw the lines as before
        var FRAME = q.parse(frame)
        var noisex = FRAME.mean_motion_x*0.1
        var noisey = FRAME.mean_motion_y*0.1

        ll.lines.clear()

        var f = new FLine()
        for(var n of FRAME.lines)
        {
            // a line has four numbers, stored as two points:
            // the x,y of the start and the x,y of the end
            f.moveTo(n[0][0]*0.1, n[0][1]*0.18)
            f.lineTo(n[1][0]*0.1, n[1][1]*0.18)
        }

        // texture the line
        ll.bakeTexture(f)

        f.pointed=true

        // map motion to line thickness
        f.fastThicken=FRAME.mean_motion_x*FRAME.mean_motion_x/4000+1

        // more grit
        f.pointSize=FRAME.mean_motion_px/300

        // tint the line pink
        f.color=vec(1.0,0.5,0.9,1)

        // and make the points bright and warm
        f.pointColor = vec(1.8,1.2,1.0,0.1)

        // jitter every vertex in the direction of motion
        f = f.byTransforming(x => vec(x.x+Math.random()*noisex, x.y+Math.random()*noisey,0))

        // scale it to the correct size
        ll.lines.add(f * vec(100,70) + vec(0, 15))

        _.stage.frame(0.05)
    }
}
```

Running this draws the detected line segments, textured with the video.
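Unlike a contour, each entry in `FRAME.lines` is a single segment with two endpoints, so each one gets its own `moveTo`/`lineTo` pair. The thickness mapping squares `mean_motion_x`, so leftward and rightward motion thicken the line equally. It also grows quickly: a `mean_motion_x` of 200 gives 200 × 200 / 4000 + 1 = 11. Both pieces are shown below in plain JavaScript. The helper names are hypothetical, and the 0.1, 0.18 and 4000 constants are copied from the code above.

```javascript
// Hypothetical helper: each detected line [[x1, y1], [x2, y2]] becomes its own
// moveTo/lineTo pair, with x scaled by 0.1 and y by 0.18 as in the code above.
function segmentsToCommands(lines, sx = 0.1, sy = 0.18) {
    return lines.flatMap(([[x1, y1], [x2, y2]]) => [
        { op: "moveTo", x: x1 * sx, y: y1 * sy },
        { op: "lineTo", x: x2 * sx, y: y2 * sy },
    ])
}

// Line thickness from horizontal motion, as in `f.fastThicken = ...` above:
// squaring makes left and right motion count the same.
function thicknessFor(meanMotionX) {
    return meanMotionX * meanMotionX / 4000 + 1
}

console.log(segmentsToCommands([[[0, 0], [10, 100]], [[20, 0], [20, 50]]]).length) // 4
console.log(thicknessFor(0), thicknessFor(200), thicknessFor(-200)) // 1 11 11
```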

OpenPose

OpenPose produces one .json file per video frame, containing skeleton points as well as landmarks for faces and hands. Recent versions of Field (22c and above) can open a directory full of these .json files:

```javascript
// import OpenPose reader
var OpenPose = Java.type("trace.mocap.OpenPose")

// open a directory of OpenPose files
// we need to pass in the resolution of the original
// movie so everything gets scaled correctly
var op = new OpenPose("/Users/marc/Documents/XC/lecture2/anastasia_1/output", 1920, 1080)

// we'll draw every frame
for(var frame of op.frames)
{
    _.stage.lines.clear()

    // we'll draw every person
    for(var person of frame.people)
    {
        var f = new FLine()

        // we'll doodle through all of the points
        for(var point of person.points)
        {
            // we'll only plot points that have non-zero
            // 'confidence', which is stored in 'z'
            if (point.z>0)
                f.smoothTo(point.x, point.y)
        }

        // line in white
        f.color=vec(1,1,1,1)

        // added to the list of lines to draw
        _.stage.lines.add(f)
    }

    // similarly, we'll draw the faces as well
    for(var face of frame.faces)
    {
        var f = new FLine()
        for(var point of face.points)
        {
            if (point.z>0)
                f.smoothTo(point.x, point.y)
        }
        f.color=vec(1,1,1,1)
        _.stage.lines.add(f)
    }

    // wait 1/10 of a second before continuing on
    _.stage.frame(0.1)
}
```

Running this plays back the skeletons and faces that OpenPose found.
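In recent OpenPose versions, each .json file holds one frame. Every detected person's keypoints are stored there as flat `[x, y, confidence, x, y, confidence, ...]` arrays (`pose_keypoints_2d`, `face_keypoints_2d`, and so on). The Field reader hands us these as points with the confidence in `z`, which is why the code tests `point.z>0`. The sketch below regroups one frame's flat array the same way in plain JavaScript. It illustrates the file format and is not the source of `trace.mocap.OpenPose`. The sample frame is made up. Assuming `person.points` keeps OpenPose's keypoint order, joining the surviving points in index order links joints that aren't neighbours on the body. For example, the right wrist (point 4) is followed by the left shoulder (point 5). That is why the result reads as a doodle rather than a stick figure.

```javascript
// Regroup an OpenPose flat keypoint array [x0, y0, c0, x1, y1, c1, ...] into
// {x, y, z} points with the confidence in z (hypothetical helper).
function toPoints(flat) {
    const points = []
    for (let i = 0; i + 2 < flat.length; i += 3) {
        points.push({ x: flat[i], y: flat[i + 1], z: flat[i + 2] })
    }
    return points
}

// A made-up frame: one person, three body keypoints, the second undetected.
const frameJSON = '{"people":[{"pose_keypoints_2d":[100,200,0.9, 0,0,0, 150,220,0.4]}]}'
const people = JSON.parse(frameJSON).people.map(person => toPoints(person.pose_keypoints_2d))

// keep only points with non-zero confidence, like `if (point.z>0)` above
const visible = people[0].filter(point => point.z > 0)
console.log(people[0].length, visible.length) // 3 2
```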

OpenPose — 7 lessons

Let’s build up the code above slowly, and then move into 3D.

1 — Showing a point

```javascript
var OpenPose = Java.type("trace.mocap.OpenPose")

var op = new OpenPose("/Users/marc/Documents/theron_playground/marc_1/output", 1920, 1920)

for(var frame of op.frames)
{
    _.stage.lines.clear()

    for(var person of frame.people)
    {
        // join up the body points, skipping any with zero confidence
        var f = new FLine()
        for(var point of person.points)
        {
            if (point.z>0)
                f.lineTo(point.x, point.y)
        }
        f.color=vec(1,1,1,1)
        _.stage.lines.add(f)

        // draw point 4 (the right wrist, the body point closest to the right hand)
        var p = person.points[4]
        if (p.z>0)
        {
            var hand = new FLine()
            hand.moveTo(p.x, p.y)
            hand.color=vec(1,1,1,1)
            // draw it as a single, larger point
            hand.pointed=true
            hand.pointSize=3
            _.stage.lines.add(hand)
        }
    }

    for(var face of frame.faces)
    {
        var f = new FLine()
        for(var point of face.points)
        {
            if (point.z>0)
                f.smoothTo(point.x, point.y)
        }
        f.color=vec(1,1,1,1)
        _.stage.lines.add(f)
    }

    _.stage.frame(0.05)
}
```
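In OpenPose's body models (both BODY_25 and the older COCO layout), keypoint 4 is the right wrist, the nearest body point to the hand. The first eight indices are the same in both layouts. A named lookup makes code like `person.points[4]` easier to read. This is a plain-JavaScript sketch with hypothetical names (`BODY`, `keypoint`) and made-up points. Check which model your files were produced with. Unlike the Field code, it also guards against a missing entry.

```javascript
// First eight body keypoints; these indices are shared by OpenPose's BODY_25
// and COCO layouts (which one your files use is an assumption to check).
const BODY = { NOSE: 0, NECK: 1, R_SHOULDER: 2, R_ELBOW: 3, R_WRIST: 4,
               L_SHOULDER: 5, L_ELBOW: 6, L_WRIST: 7 }

// Return the keypoint at `index`, or null when it is missing or has zero confidence.
function keypoint(points, index) {
    const p = points[index]
    return p !== undefined && p.z > 0 ? p : null
}

// made-up points for one person, in keypoint order
const points = [
    { x: 50, y: 20, z: 0.9 }, // nose
    { x: 50, y: 40, z: 0.8 }, // neck
    { x: 35, y: 40, z: 0.7 }, // right shoulder
    { x: 30, y: 60, z: 0.6 }, // right elbow
    { x: 28, y: 80, z: 0.0 }, // right wrist, not detected in this frame
]
console.log(keypoint(points, BODY.R_ELBOW)) // { x: 30, y: 60, z: 0.6 }
console.log(keypoint(points, BODY.R_WRIST)) // null
console.log(keypoint(points, BODY.L_WRIST)) // null
```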

2 — A motion trail

```javascript
var OpenPose = Java.type("trace.mocap.OpenPose")

var op = new OpenPose("/Users/marc/Documents/theron_playground/marc_1/output", 1920, 1920)

// start from an empty stage
_.stage.lines.clear()

// one line, added to the stage once, that we keep extending
var f = new FLine()
f.color=vec(1,1,1,1)
_.stage.lines.add(f)

for(var frame of op.frames)
{
    for(var person of frame.people)
    {
        // point 4 is the right wrist
        var p = person.points[4]
        if (p.z>0)
        {
            f.lineTo(p.x, p.y)
        }
    }

    _.stage.frame(0.05)
}
```

2b — A motion trail, but in 3D

```javascript
var OpenPose = Java.type("trace.mocap.OpenPose")

var op = new OpenPose("/Users/marc/Documents/theron_playground/marc_1/output", 1920, 1920)

var f = new FLine()
f.color=vec(1,1,1,1)

// a 3D layer with a camera we can drive from the keyboard
var layer = _.stage.withName("asdf")
layer.is3D = true
layer.makeKeyboardCamera()
layer.vrDefaults()
layer.camera.position = vec(0.1, 0.05, -0.2)
layer.camera.target = vec(0, 0.05, 0)

_.stage.lines.clear()
layer.lines.clear()
layer.lines.f = f

for(var frame of op.frames)
{
    for(var person of frame.people)
    {
        // point 4 is the right wrist; divide by 1000 to fit the 3D scene
        var p = person.points[4]
        if (p.z>0)
        {
            f.lineTo(p.x/1000, p.y/1000)
        }

        // push the whole trail back a little in z, every frame
        f = f + vec(0, 0, 0.01)
        layer.lines.f = f
    }

    // draw the body in translucent white
    for(var person of frame.people)
    {
        var b = new FLine()
        for(var point of person.points)
        {
            if (point.z>0)
                b.smoothTo(point.x/1000, point.y/1000)
        }
        b.color=vec(1,1,1,0.3)
        layer.lines.body = b
    }

    _.stage.frame(0.05)
}
```
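In this 3D version, running `f = f + vec(0, 0, 0.01)` after every frame means a point's z coordinate records its age. The newest point sits at 0.01, the one before it at 0.02, and so on. The camera sits at z = -0.2, looking towards z = 0, so increasing z moves points away from it and older parts of the trail recede. The same bookkeeping on plain arrays looks like this (the `stepTrail` helper is hypothetical):

```javascript
// Plain-array model of this step: each frame appends the wrist position at
// z = 0, then the whole trail is shifted back by dz, as
// `f = f + vec(0, 0, 0.01)` does to the FLine.
function stepTrail(trail, x, y, dz = 0.01) {
    const extended = [...trail, [x, y, 0]]
    return extended.map(([px, py, pz]) => [px, py, pz + dz])
}

let trail = []
for (const [x, y] of [[0.1, 0.2], [0.15, 0.22], [0.2, 0.25]]) {
    trail = stepTrail(trail, x, y)
}

// the oldest point has been pushed back three times, the newest once
console.log(trail.map(p => p[2].toFixed(2))) // [ '0.03', '0.02', '0.01' ]
```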

3 — A motion ‘surface’

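We can turn that trail into a surface just by adding f.filled=true. This version also pushes the trail back by 0.001 rather than 0.01 each frame. For completeness, here is the whole thing: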

```javascript
var OpenPose = Java.type("trace.mocap.OpenPose")

var op = new OpenPose("/Users/marc/Documents/theron_playground/marc_1/output", 1920, 1920)

var f = new FLine()
f.color=vec(1,1,1,1)

var layer = _.stage.withName("asdf")
layer.is3D = true
layer.makeKeyboardCamera()
layer.vrDefaults()
layer.camera.position = vec(0.1, 0.05, -0.2)
layer.camera.target = vec(0, 0.05, 0)

// fill the trail in, turning it into a surface
f.filled=true

_.stage.lines.clear()
layer.lines.clear()
layer.lines.f = f

for(var frame of op.frames)
{
    for(var person of frame.people)
    {
        // point 4 is the right wrist
        var p = person.points[4]
        if (p.z>0)
        {
            f.lineTo(p.x/1000, p.y/1000)
        }

        // push the whole trail back in z
        f = f + vec(0, 0, 0.001)
        layer.lines.f = f
    }

    // draw the body in translucent white
    for(var person of frame.people)
    {
        var b = new FLine()
        for(var point of person.points)
        {
            if (point.z>0)
                b.smoothTo(point.x/1000, point.y/1000)
        }
        b.color=vec(1,1,1,0.3)
        layer.lines.body = b
    }

    _.stage.frame(0.05)
}
```

3b — more distortion (rotating as well as translating)
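The new ingredient in this step is `(f - pivot) * rotate3(angle, axis) + pivot`. It moves the wrist's position to the origin, rotates the whole trail about an axis through it, and moves it back. Because this runs every frame on the whole line, older parts of the trail have been rotated more times than newer ones, and that is where the twist comes from. Below is the same pivot rotation for a single point in plain JavaScript, using Rodrigues' rotation formula. The function is ours. This page doesn't say whether Field's `rotate3` takes degrees or radians, so radians here are an assumption.

```javascript
// Rotate point p by `angle` radians about an axis through `pivot`, using
// Rodrigues' rotation formula: move the pivot to the origin, rotate, move back.
// (Radians are an assumption; check what Field's rotate3 expects.)
function rotateAbout(p, pivot, axis, angle) {
    const length = Math.hypot(axis[0], axis[1], axis[2])
    const k = axis.map(a => a / length)       // unit rotation axis
    const v = p.map((c, i) => c - pivot[i])   // pivot -> origin
    const cos = Math.cos(angle)
    const sin = Math.sin(angle)
    const dot = k[0] * v[0] + k[1] * v[1] + k[2] * v[2]
    const cross = [k[1] * v[2] - k[2] * v[1], // k x v
                   k[2] * v[0] - k[0] * v[2],
                   k[0] * v[1] - k[1] * v[0]]
    // rotated vector, then origin -> pivot
    return v.map((c, i) => c * cos + cross[i] * sin + k[i] * dot * (1 - cos) + pivot[i])
}

// a quarter turn about the z axis through (1, 1, 0) sends (2, 1, 0) to (1, 2, 0)
const turned = rotateAbout([2, 1, 0], [1, 1, 0], [0, 0, 1], Math.PI / 2)
console.log(turned.map(c => Number(c.toFixed(6)))) // [ 1, 2, 0 ]
```

The axis `vec(0, 0.5, 0.5)` used in the Field code doesn't have unit length, which is why the sketch normalizes it first.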

```javascript
var OpenPose = Java.type("trace.mocap.OpenPose")

var op = new OpenPose("/Users/marc/Documents/theron_playground/marc_1/output", 1920, 1920)

var f = new FLine()
f.color=vec(1,1,1,0.3)

var layer = _.stage.withName("asdf")
layer.is3D = true
layer.makeKeyboardCamera()
layer.vrDefaults()
layer.camera.position = vec(0.1, 0.05, -0.2)
layer.camera.target = vec(0, 0.05, 0)

f.filled=true

_.stage.lines.clear()
layer.lines.clear()
layer.lines.f = f

for(var frame of op.frames)
{
    for(var person of frame.people)
    {
        // point 4 is the right wrist
        var p = person.points[4]
        if (p.z>0)
        {
            f.lineTo(p.x/1000, p.y/1000)
        }

        // push the whole trail back in z...
        f = f + vec(0, 0, 0.001)

        // ...and rotate it around the wrist's current position
        var pivot = vec(p.x/1000, p.y/1000)
        f = (f - pivot) * rotate3(1, vec(0,0.5, 0.5)) + pivot

        layer.lines.f = f
    }

    // draw the body in translucent white
    for(var person of frame.people)
    {
        var b = new FLine()
        for(var point of person.points)
        {
            if (point.z>0)
                b.smoothTo(point.x/1000, point.y/1000)
        }
        b.color=vec(1,1,1,0.3)
        layer.lines.body = b
    }

    _.stage.frame(0.05)
}
```

4 — smoothing the motion
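This step smooths the wrist position with an exponential moving average. Each new position is blended with the previous smoothed one: `at = at*(1-alpha) + alpha*previous`. A larger `alpha` gives a smoother but laggier trail. After a sudden jump, the remaining gap shrinks by a factor of `alpha` per frame. With `alpha = 0.9`, it takes 22 frames (about 1.1 seconds at 0.05 seconds per frame) to cover 90% of the jump. The `alpha = 0.5` used in the final step takes 4. A plain-JavaScript sketch of both calculations follows (helper names are hypothetical):

```javascript
// Exponential smoothing as in this step: blend each new sample with the
// previous smoothed value; larger alpha = smoother but slower to respond.
function smooth(samples, alpha) {
    const out = []
    let previous = null
    for (const sample of samples) {
        previous = previous === null ? sample : sample * (1 - alpha) + alpha * previous
        out.push(previous)
    }
    return out
}

// Frames needed to cover `fraction` of a sudden jump: the remaining gap
// shrinks by a factor of alpha every frame.
function framesToSettle(alpha, fraction = 0.9) {
    if (alpha >= 1 || fraction >= 1) return Infinity // never gets there
    let frames = 0
    while (Math.pow(alpha, frames) > 1 - fraction) frames++
    return frames
}

console.log(smooth([0, 1, 1, 1], 0.5))                // [ 0, 0.5, 0.75, 0.875 ]
console.log(framesToSettle(0.9), framesToSettle(0.5)) // 22 4
```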

```javascript
var OpenPose = Java.type("trace.mocap.OpenPose")

var op = new OpenPose("/Users/marc/Documents/theron_playground/marc_1/output", 1920, 1920)

var f = new FLine()
f.color=vec(1,1,1,0.3)

var layer = _.stage.withName("asdf")
layer.is3D = true
layer.makeKeyboardCamera()
layer.vrDefaults()
layer.camera.position = vec(0.1, 0.05, -0.2)
layer.camera.target = vec(0, 0.05, 0)

f.filled=true

_.stage.lines.clear()
layer.lines.clear()
layer.lines.f = f

// the last smoothed position of the wrist
var previous = null

// how much of the previous position to keep (0 means no smoothing)
var alpha = 0.9

for(var frame of op.frames)
{
    for(var person of frame.people)
    {
        // point 4 is the right wrist
        var p = person.points[4]

        // skip this person if OpenPose isn't confident about the wrist
        if (p.z<=0)
            continue

        var at = vec(p.x/1000, p.y/1000)

        if (previous==null)
            previous = at
        else
            // blend the new position with the previous (smoothed) one
            at = at * (1-alpha) + (alpha) * previous

        f.lineTo(at)

        // the further the wrist moved, the more we push and twist the trail
        var amount = Math.min(1.0, 0.1+ previous.distance(at)*1000)
        f = f + vec(0, 0, 0.001*amount)
        f = (f - at) * rotate3(amount, vec(0,0.5, 0.5)) + at

        layer.lines.f = f

        previous = at
    }

    // draw the body in translucent white
    for(var person of frame.people)
    {
        var b = new FLine()
        for(var point of person.points)
        {
            if (point.z>0)
                b.smoothTo(point.x/1000, point.y/1000)
        }
        b.color=vec(1,1,1,0.3)
        layer.lines.body = b
    }

    _.stage.frame(0.05)
}
```

5 — with points again

```javascript
var OpenPose = Java.type("trace.mocap.OpenPose")

var op = new OpenPose("/Users/marc/Documents/theron_playground/marc_1/output", 1920, 1920)

var f = new FLine()
f.color=vec(1,1,1,0.3)

var layer = _.stage.withName("asdf")
layer.is3D = true
layer.makeKeyboardCamera()
layer.vrDefaults()
layer.camera.position = vec(0.1, 0.05, -0.2)
layer.camera.target = vec(0, 0.05, 0)
layer.bindTriangleShader(_)

f.filled=true

_.stage.lines.clear()
layer.lines.clear()
layer.lines.f = f

// the last smoothed position of the wrist
var previous = null

// lighter smoothing than before
var alpha = 0.5

for(var frame of op.frames)
{
    for(var person of frame.people)
    {
        // point 4 is the right wrist
        var p = person.points[4]

        // skip this person if OpenPose isn't confident about the wrist
        if (p.z<=0)
            continue

        var at = vec(p.x/1000, p.y/1000)

        if (previous==null)
            previous = at
        else
            at = at * (1-alpha) + (alpha) * previous

        // a smooth curve through slightly noisy copies of the positions
        f.smoothTo(at.noise(0.001))

        var amount = Math.min(1.0, 0.01+ previous.distance(at)*1000)
        f = f + vec(0, 0, 0.004*amount)
        f = (f - at) * rotate3(amount*0.1, vec(0,0.5, 0.5)) + at

        // draw the vertices as points, sizing the newest by the cube of amount
        f.pointed=true
        f.last().pointSize=amount*amount*amount*1
        f.pointSize=1

        layer.lines.f = f

        previous = at
    }

    // draw the body in translucent white
    for(var person of frame.people)
    {
        var b = new FLine()
        for(var point of person.points)
        {
            if (point.z>0)
                b.smoothTo(point.x/1000, point.y/1000)
        }
        b.color=vec(1,1,1,0.3)
        layer.lines.body = b
    }

    _.stage.frame(0.05)
}
```
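In this last step, `amount` turns the distance the smoothed wrist moved since the last frame into a control between 0 and 1. Positions were divided by 1000, so multiplying the distance by 1000 puts it back into the units the OpenPose reader returned. As a result, `amount` saturates at 1 once the wrist moves just under one of those units per frame. Cubing it for the point size keeps slow moments almost invisible: at an amount of 0.5 the point size is only 0.125. The mapping as plain JavaScript follows. The names are hypothetical, and the constants are copied from the code above.

```javascript
// How far the smoothed wrist moved (in the /1000 units used above) becomes
// a 0..1 "amount"; the base of 0.01 keeps it from ever reaching zero.
function amountFromStep(step, base = 0.01) {
    return Math.min(1.0, base + step * 1000)
}

// point size grows with the cube of amount, as in f.last().pointSize above
function pointSizeFor(amount) {
    return amount * amount * amount
}

console.log(amountFromStep(0))    // 0.01
console.log(amountFromStep(0.01)) // 1
console.log(pointSizeFor(0.5))    // 0.125
```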