Respatializing Photographs — a Field tool

Mac Binaries & Source

For the last year, OpenEnded Group have been investigating recent advances in solving the structure-from-motion problem in computer vision. To that end we've curated a collection of open source projects, built them for the Mac, and created several tools in Field for running reconstructions and interactively exploring the results.

This work was conducted under NEH Digital Humanities grant # 50633.

The key algorithm, and the key piece of software, is Noah Snavely's wonderful Bundler, made available here. Our Mac binaries and edited sources are available here. The license for this source code is GPL.

To use Bundler effectively you'll need an implementation of SIFT. We've compiled Andrea Vedaldi's SIFT++ code; our Mac binaries and source code are available here. This code is copyright 2006 The Regents of the University of California and may (only) be freely used for research purposes. The archive above gives the full license.

The final piece of software is Furukawa and Ponce's PMVS-2, which can be used to create dense point clouds from the sparse clouds and camera frusta that Bundler produces. Our Mac binaries and source are available here. The license for this source is GPL.
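To make the "sparse clouds and cameras" that Bundler produces a little more concrete, here is a minimal Python sketch of reading a Bundler output file. It assumes the plain-text "Bundle file v0.3" layout described in Bundler's own documentation (a camera count and point count, then focal length, two distortion terms, a 3x3 rotation and a translation per camera, then position, color and view list per point). The names `parse_bundle` and `camera_center` are hypothetical helpers for illustration only; they are not part of Bundler, PMVS-2, or Field.

```python
import io


def parse_bundle(stream):
    """Parse a Bundler v0.3 text stream into (cameras, points).

    Assumption: the input follows the 'Bundle file v0.3' layout from
    Bundler's documentation. This is an illustrative reader only.
    """
    # Flatten the file into whitespace-separated tokens, skipping the
    # '#' header line and any blank lines.
    tokens = []
    for line in stream:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        tokens.extend(line.split())
    it = iter(tokens)

    num_cameras = int(next(it))
    num_points = int(next(it))

    cameras = []
    for _ in range(num_cameras):
        f = float(next(it))    # focal length in pixels
        k1 = float(next(it))   # radial distortion coefficients
        k2 = float(next(it))
        R = [[float(next(it)) for _ in range(3)] for _ in range(3)]
        t = [float(next(it)) for _ in range(3)]
        cameras.append({"f": f, "k1": k1, "k2": k2, "R": R, "t": t})

    points = []
    for _ in range(num_points):
        position = [float(next(it)) for _ in range(3)]
        color = [int(next(it)) for _ in range(3)]
        views = []
        for _ in range(int(next(it))):
            cam = int(next(it))   # index of the camera that saw the point
            key = int(next(it))   # index of the SIFT keypoint in that image
            x = float(next(it))   # keypoint position in the image
            y = float(next(it))
            views.append((cam, key, x, y))
        points.append({"position": position, "color": color, "views": views})

    return cameras, points


def camera_center(cam):
    """World-space camera position, C = -R^T t."""
    R, t = cam["R"], cam["t"]
    return [-sum(R[r][c] * t[r] for r in range(3)) for c in range(3)]


# A tiny made-up file: one camera, one point (not real reconstruction data).
SAMPLE = """# Bundle file v0.3
1 1
500.0 0.0 0.0
1 0 0
0 1 0
0 0 1
1 2 3
0.5 0.5 -2.0
255 128 0
1 0 7 10.0 -5.0
"""

cameras, points = parse_bundle(io.StringIO(SAMPLE))
print(camera_center(cameras[0]), points[0]["position"])
```

For a real reconstruction you would pass an open file handle instead of the in-memory sample. Cameras that Bundler could not register are typically written with all-zero parameters, so a viewer would likely want to skip those.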

To get all of these things compiling and working, you'll need a few dependencies. The first is a Mac version of Matthias Wandel's JHead utility (for examining the Exif tags of JPEGs). Next you'll need a working f2c installation, available here. CMinpack, GSL, and the jpeg library complete the list of compilation dependencies.

Easy binary install

Firstly, if you are just looking for OS X binaries of the above code wrapped up in a relatively simple interface (and an Ant script that you can call), use this download here.

Example Field document

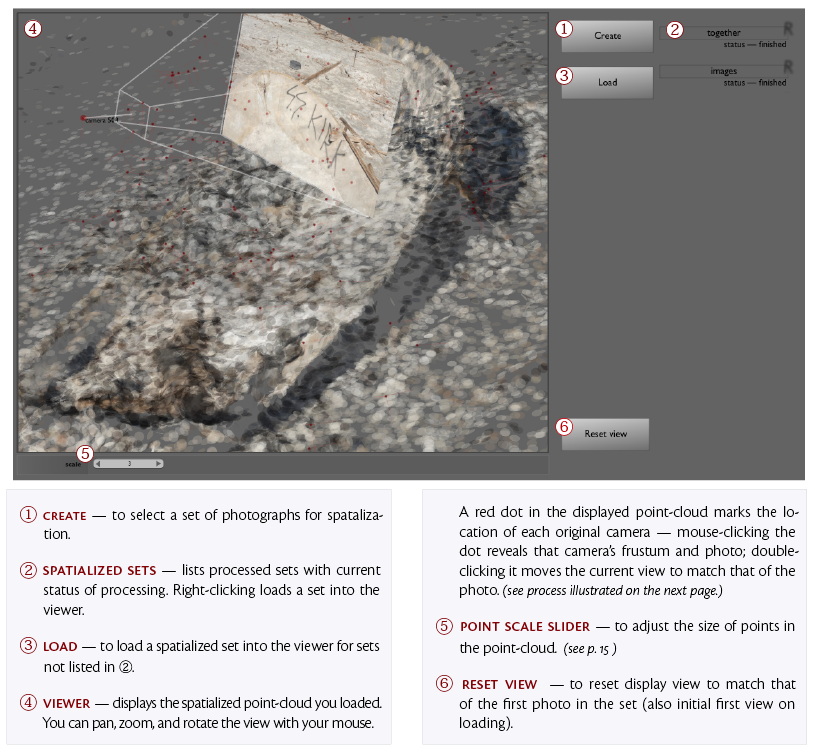

Secondly, if you are looking for a Field workbench for looking at the reconstructions made by the above code, take a look at this archive. This contains example code, a Field plugin and an example interface for a reconstruction viewer. You'll need the preview of the latest Field.

We'll be providing a standalone reconstruction interface alongside the next beta of Field.

For more information about this interface and the project in general, see our white paper.