Notes on spatial audio in Field

Recent builds of Field include support for spatial audio, with head position governed by tracking devices. This technology stack is based on Google’s Omnitone / Resonance Audio work, which enables “higher-order ambisonic” encoding, rendering, reverberation and decoding to binaural output. Field provides a simple interface to this engine, which runs in a webpage.

Having inserted the template "spatial_audio2" into your document, you’ll have access to a new variable, _.space. It works much like _.stage and is available everywhere. To get the spatial audio engine running, open a browser at the usual address: http://localhost:8090/. The spatial audio engine is included in our WebVR engine, but it is also available at this URL when running Oculus / Vive.

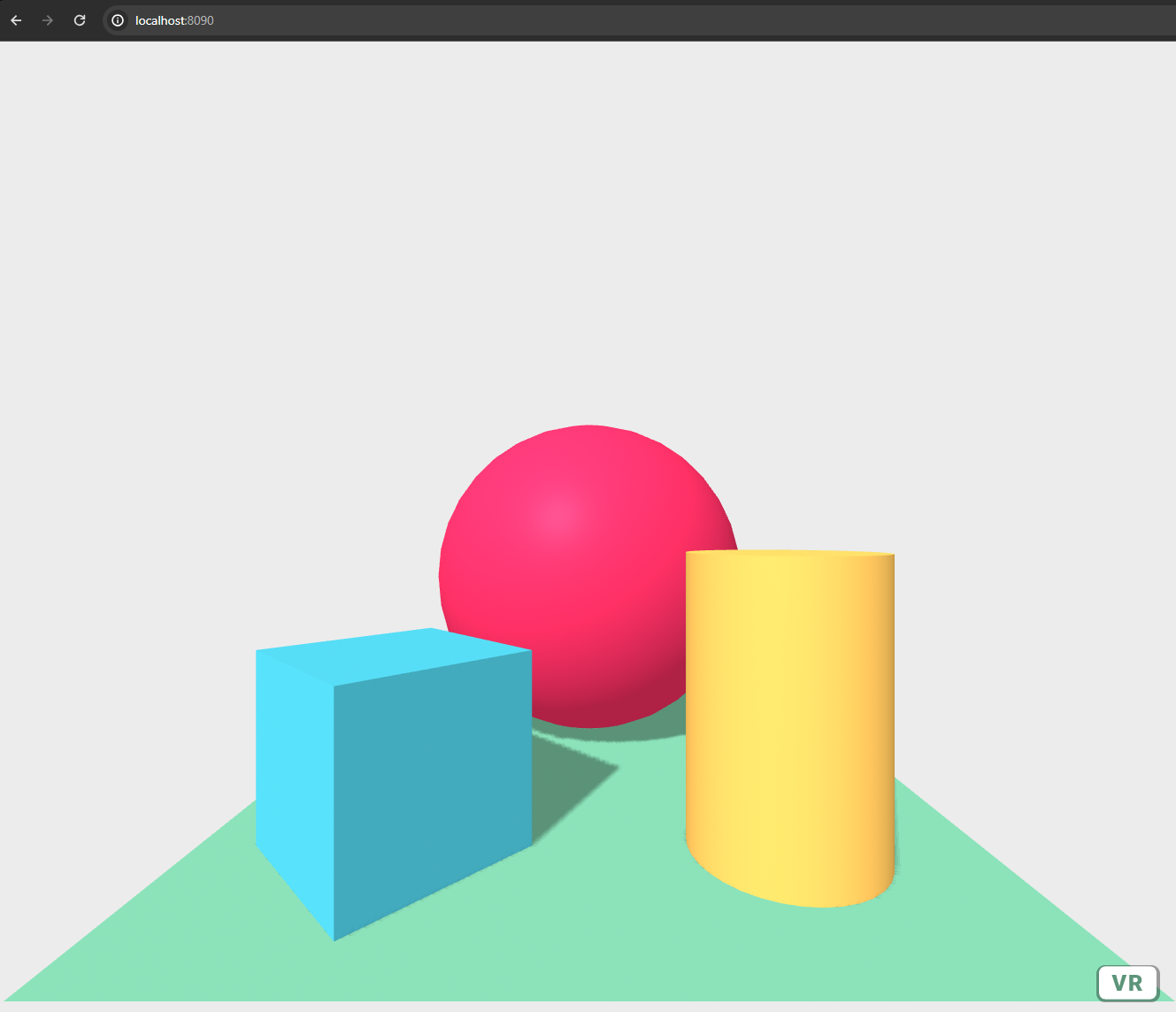

At that URL you should see a screen like this:

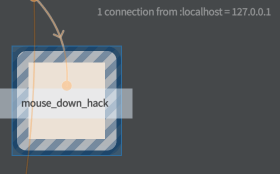

In order to make sound from a webpage "unprovoked" (that is, not in response to a mouse click), we need a little hack. First, alt-click on the box "mouse_down_hack" that arrived with the template.

Then click somewhere in the webpage to give it permission to play audio. From that point on, the ambisonic audio engine will be running!
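That click is needed because browsers generally refuse to start Web Audio output until the page has received a user gesture. The sketch below shows the general browser-side pattern using the standard `AudioContext.resume()` call. It is an illustration of the browser policy, not the code Field's page actually runs, and `unlockAudioOnFirstClick` is a hypothetical name.

```javascript
// Hypothetical helper: resume a suspended AudioContext on the first click.
// In a real page, `target` would be `document` and `audioContext` a
// `new AudioContext()`; both are passed in so the pattern is easy to test.
function unlockAudioOnFirstClick(target, audioContext) {
  target.addEventListener(
    "click",
    function () {
      // autoplay policies typically only let resume() succeed after a user gesture
      if (audioContext.state === "suspended") {
        return audioContext.resume();
      }
    },
    { once: true } // the listener removes itself after the first click
  );
}

// Usage in a browser page:
// var ctx = new AudioContext();
// unlockAudioOnFirstClick(document, ctx);
```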

Like stage, _.space has the idea of a named layer that is created on demand:

```javascript
var layer = _.space.withFile("/Users/marc/Documents/c3.wav")
```

That call will open the file and start streaming it to the browser(s). Given a layer, you can do the following with it:

```javascript
// start at the beginning
layer.play(0)

// start 10 seconds in
layer.play(10)

// pause
layer.pause()

// continue from where you left off
layer.play()

// move the sound to position 1,2,3
layer.position(1,2,3)
```

Additionally you can:

```javascript
_.space.setRoomDimensions(4,5,6) // sets the size of the room that you are 'in'
_.space.setRoomMaterial('plywood') // makes the room out of plywood
```

See here for a list of materials.
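Because `layer.position(x, y, z)` just takes coordinates, moving a sound over time comes down to computing a new position each frame. The sketch below computes a point on a horizontal circle around the listener. It assumes the listener sits at the origin, that y is the up axis and that units are meters (the Resonance Audio convention as we understand it; these notes don't state Field's convention). `orbitPosition` is a hypothetical helper, not part of `_.space`, and how you get a per-frame callback in Field isn't covered here.

```javascript
// Hypothetical helper (not part of _.space): a point on a horizontal circle
// of `radius` around the origin, completing one lap every `periodSeconds`.
// Assumes y is "up", so the circle lies in the x/z plane at height `height`.
function orbitPosition(timeSeconds, radius, height, periodSeconds) {
  var angle = (2 * Math.PI * timeSeconds) / periodSeconds;
  return [radius * Math.cos(angle), height, radius * Math.sin(angle)];
}

// Usage inside Field, e.g. once per animation frame (t in seconds):
// var p = orbitPosition(t, 2, 0, 8)
// layer.position(p[0], p[1], p[2])
```

Keeping the maths in a plain function means you can swap in any other path (a figure eight, a random walk) without touching the calls to the layer.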

Other extensions to Stage [ADVANCED]

Right now, the only other extension to Field you need to build things in 3D is the ability to constrain layers so that they are only visible in particular eyes. This is useful when you want to display different textures to different eyes (as in the stereo pair viewer). The following code does this:

```javascript
// Java class import (not used in this excerpt)
var KeyboardCamera = Java.type('field.graphics.util.KeyboardCamera')

// here are a pair of images
var layer1 = _.stage.withTexture("c:/Users/marc/Pictures/classPairs/09/left.jpg")
var layer2 = _.stage.withTexture("c:/Users/marc/Pictures/classPairs/09/right.jpg")

// 'aspect' (image width / height) isn't defined in the original snippet;
// 4/3 is an assumed placeholder, replace it with your images' ratio
var aspect = 4/3

// you'll need to tweak this a bit
var z = -50

layer1.lines.clear()
layer2.lines.clear()

// this constrains layer1 to be on the left eye and
layer1.sides = 1
// layer 2 to be on the right
layer2.sides = 2

// let's build a rectangle set back by '-z'
var f = new FLine()
f.moveTo(50-50*aspect, 0, -z)
f.lineTo(50+50*aspect, 0, -z)
f.lineTo(50+50*aspect, 100, -z)
f.lineTo(50-50*aspect, 100, -z)
f.lineTo(50-50*aspect, 0, -z)
layer1.bakeTexture(f)
f.filled = true
f.color = vec(1,1,1,1)
layer1.lines.f = f

// let's build another rectangle set back by '-z'
// (just in case we want it to be different)
f = new FLine()
f.moveTo(50-50*aspect, 0, -z)
f.lineTo(50+50*aspect, 0, -z)
f.lineTo(50+50*aspect, 100, -z)
f.lineTo(50-50*aspect, 100, -z)
f.lineTo(50-50*aspect, 0, -z)
layer2.bakeTexture(f)
f.filled = true
f.color = vec(1,1,1,1)
layer2.lines.f = f
```

With it, you can completely rebuild the original stereo pair viewer from Sketch 1. Of course, Field lets you do a lot more: you can place those pairs anywhere you want (try one pair per box), put them in a timeline, vary their opacity, and so on.
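The two rectangles above trace the same outline, so if you want several pairs (say, one per box) it can help to compute the corner points once. The sketch below is plain JavaScript and returns the same five points that the `moveTo` / `lineTo` calls trace: a closed loop 100 units tall and `100*aspect` wide, centred at x = 50, at depth `-z`. `rectangleCorners` is a hypothetical helper name, not part of Field.

```javascript
// Hypothetical helper: the closed outline used for each eye's rectangle.
// aspect = image width / height; the rectangle sits at depth -z.
function rectangleCorners(aspect, z) {
  var left = 50 - 50 * aspect;
  var right = 50 + 50 * aspect;
  var depth = -z;
  return [
    [left, 0, depth],    // moveTo
    [right, 0, depth],
    [right, 100, depth],
    [left, 100, depth],
    [left, 0, depth]     // back to the start to close the loop
  ];
}

// Usage inside Field (FLine, moveTo and lineTo as in the code above):
// var pts = rectangleCorners(aspect, z)
// var f = new FLine()
// f.moveTo(pts[0][0], pts[0][1], pts[0][2])
// for (var i = 1; i < pts.length; i++) f.lineTo(pts[i][0], pts[i][1], pts[i][2])
```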