Texture Operations

This page describes a little system, written for Field, that makes for an interesting sandbox for playing with various manipulations of pixels in real time, targeted towards ‘Sketch2’ of our Experimental Captures class.

Getting started

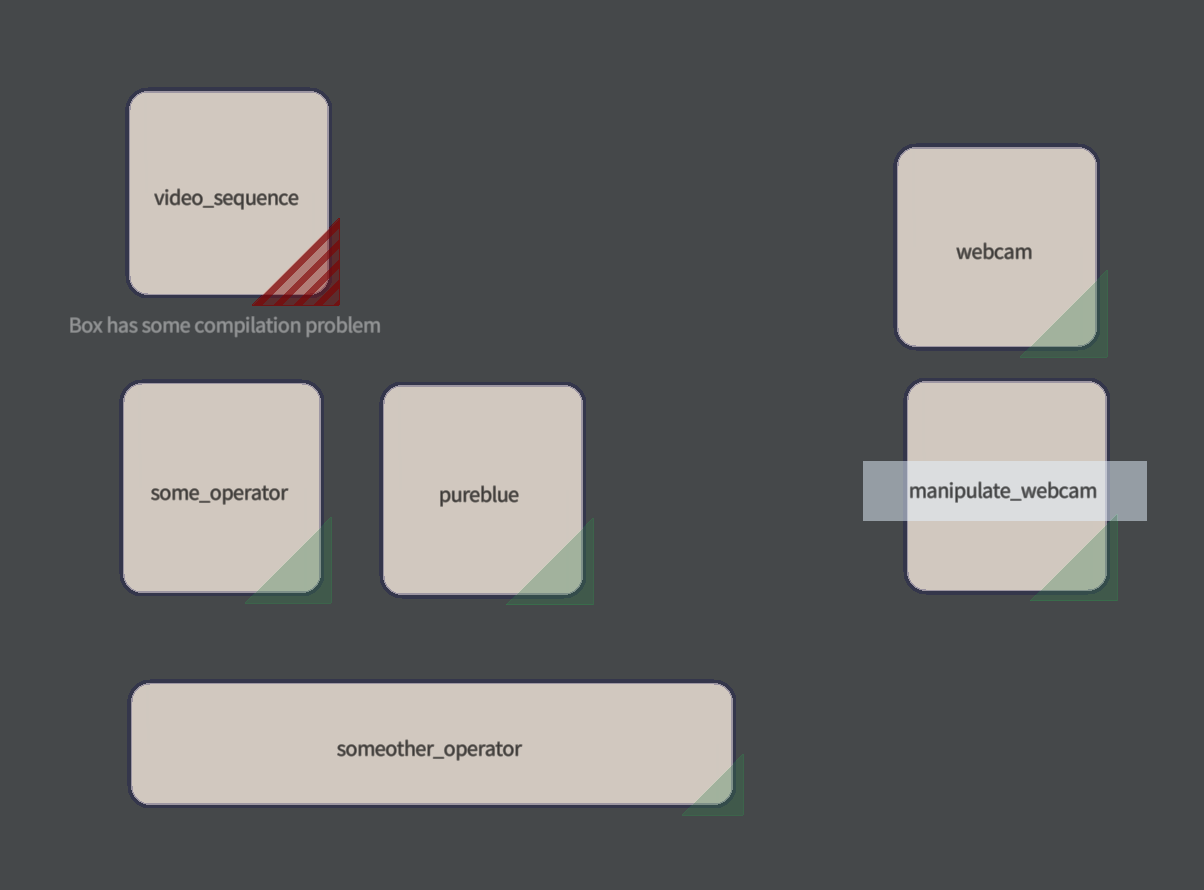

First, find yourself with a clear Field workspace and then, ctrl-space insert from workspace a template called sketch2.everything. After 10 seconds or so, you’ll see a few new boxes hanging out, and a rendering window:

These are some boxes to help you get started. The one in the top left has an error (the fancy red corner), because it’s referring to a directory full of .jpg files that almost certainly doesn’t exist.

These boxes, like all boxes in Field, contain code. Unlike most boxes in Field, this code probably isn’t very interesting to you. The interesting code is in a separate ‘tab’, and a separate programming language, because it’s running on a separate computer hiding in the corner of the computer you think you are sitting in front of — the graphics hardware. This computer isn’t very good at general tasks (like word-processing, or reading files), but it’s excellent at executing a million copies of the same program over and over again. This is exactly what we want to be able to do when we mess with pixels.

Getting to the code

To get to this code, select a box and then press ctrl-space edit fragment shader. Now you’ll see something like:

    #version 410

    ${_._uniform_decl_}

    layout(location=0) out vec4 _output;
    in vec2 tc;

    // -----------------------------------------------------------

    void main()
    {
        _output = vec4(0,tc.y,1,1);
    }

The lines after the break (the // ------) are what you are interested in. This language is called ‘GLSL’; future versions of this page will cover it in more detail. One last thing you need to know:

Running the code

After you have tweaked your GLSL code, you need to parcel it up and send it to the graphics card again. Do this with ctrl-space reload shader. Any errors in your code should be revealed then.

Seeing your code

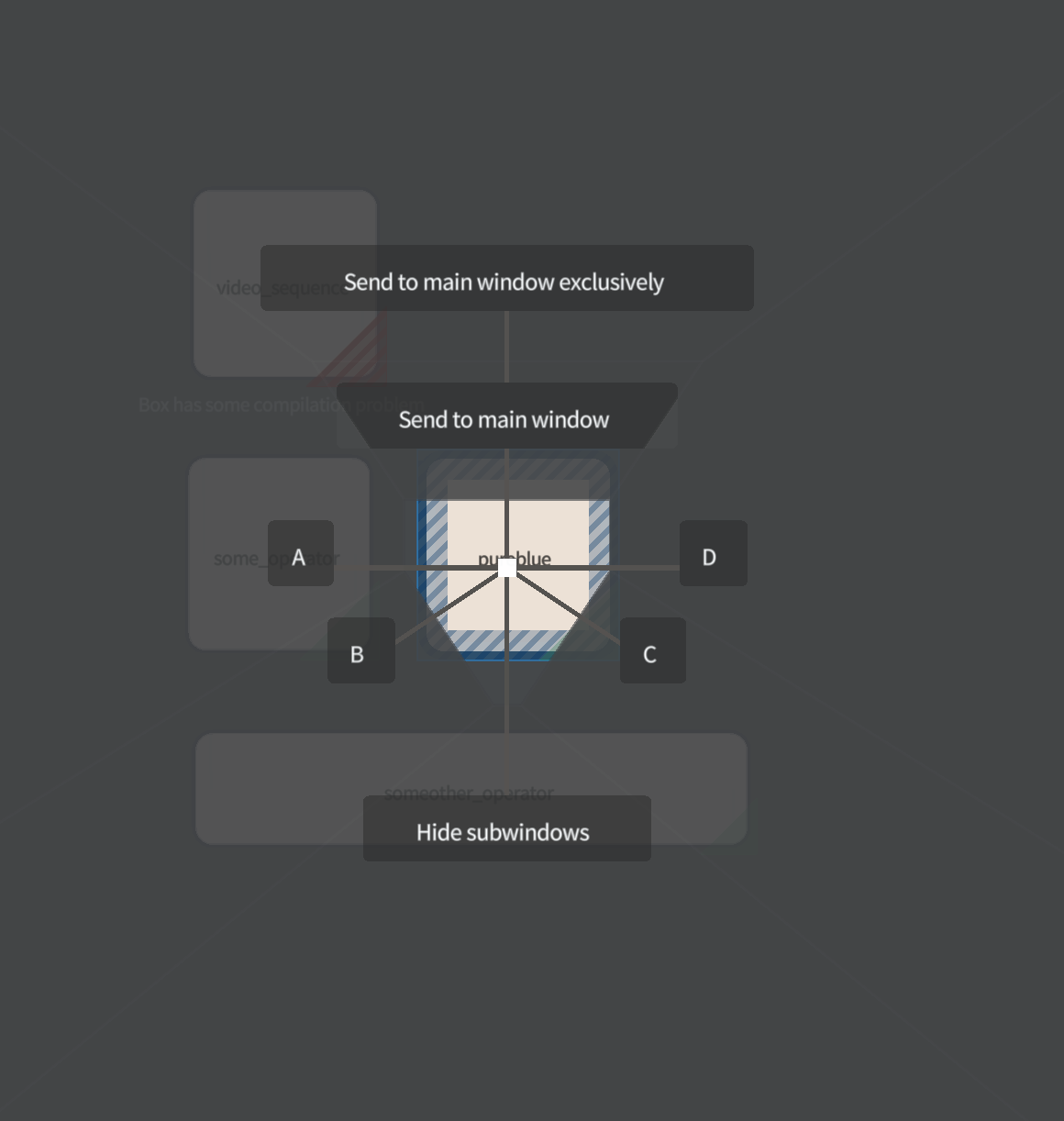

Texture Operators comes with a little ‘windowing system’ that lets you send the output of boxes to the main window. Select a box in Field and press and hold space, you should see:

Swipe the mouse and release the space bar to select something. Position’s A, B, C, D are little ‘monitors’ at the bottom of the window and the main display is everything else. This mimics the live ‘vision mixers’ of old.

What to write !?

Now that you are comfortable getting to the code, running it and seeing the results, what can you write? Let’s look at this code very closely (continue to ignore everything prior to the // --- break)

    #version 410

    ${_._uniform_decl_}

    layout(location=0) out vec4 _output;
    in vec2 tc;

    // -----------------------------------------------------------

    void main()
    {
        _output = vec4(0,tc.y,1,1);
    }

This yields:

There’s a lot going on, let’s break it down:

- void main() {}: this is where your code goes (you might recognize this from other programming languages, like C or Java).

- _output: this is the variable that is going to end up holding the color of the pixel that we want to draw. Whatever is left in _output by the time your code finishes is the color of this pixel. If you don’t write something to _output, who knows what you’ll get?

- vec4: colors in GLSL are 4-vectors: Red, Green, Blue and ‘Alpha’ (opacity). Draw something with an alpha of 1 and it’s completely opaque; draw something with an alpha of 0 and you might as well have not drawn anything. You can talk about the individual components of a vec4 with dot notation: _output.x, _output.y, _output.z and the fourth ‘dimension’ _output.w. You can also use the letters rgba, so _output.r for red. .r and .x are the same thing (and g is the same as y, b is the same as z, and a is the same as w).

- tc.y: this is our first really interesting thing. tc.y is a variable that goes from 0 to 1 as we move from the bottom of the image to the top. Similarly, tc.x does the same thing from left (0) to right (1).

- (0, tc.y, 1, 1): break this down into color channels. 0 red, green given by tc.y, maximum blue and completely opaque. You should be able to satisfy yourself that this gives a gradient from cyan (green + blue) to just pure blue.
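To make the dot notation concrete, here is a small sketch that builds the same color one component at a time. The local variable color is a name made up for this example; everything above the break is copied from the template unchanged.

```glsl
#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;
in vec2 tc;

// -----------------------------------------------------------

void main()
{
    // start from opaque black: red, green, blue = 0 and alpha = 1
    vec4 color = vec4(0.0, 0.0, 0.0, 1.0);

    // .g and .y both name the second component (green)
    color.g = tc.y;

    // .z and .b both name the third component (blue)
    color.z = 1.0;

    _output = color;
}
```

Since color ends up as (0, tc.y, 1, 1), this should draw the same gradient as the one-line version.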

Questions:

- How would you have the gradient go from left to right instead?

- What will happen if you set the red component to tc.x?

Live input

Color gradients were a staple of the 90s demoscene, but what about something more interesting? If you have a camera attached to the computer that you are running on, then you’ll be able to run something like:

#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;

in vec2 tc;

// -----------------------------------------------------------

void main()

{

_output = webcam(tc.x, tc.y);

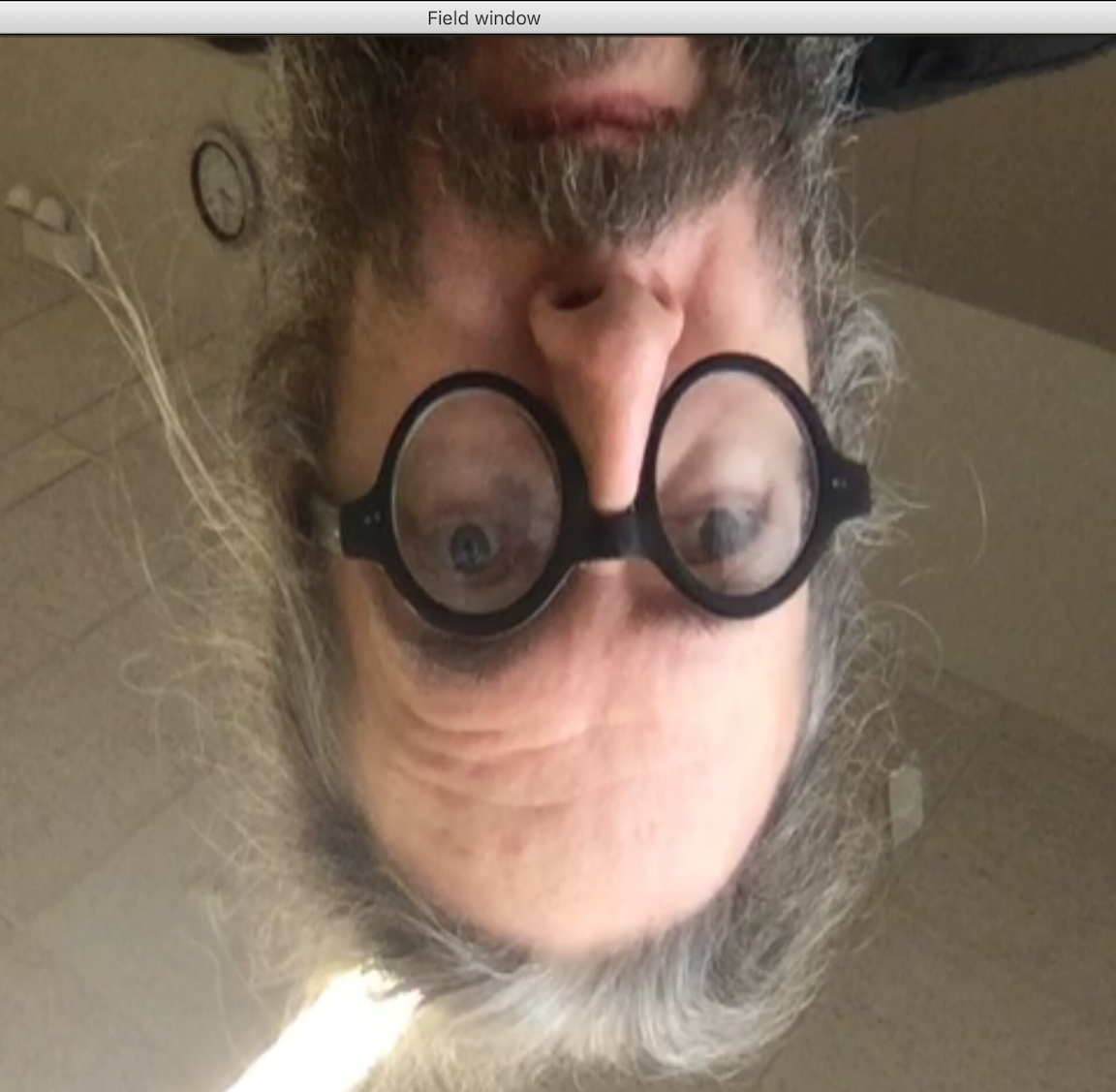

}That might give you something like:

It’s a 50/50 chance of your image being upside down. Webcameras! Now we have a pixel that has been grabbed from the webcamera at ‘coordinates’ tc.x, tc.y; that is, we’re sampling the camera at a place that changes from pixel to pixel across the window. Things to think about:

- check that sampling from 0.5, 0.5 would give you the middle of your camera, stretched out to fill the window.

- how would you go about flipping the image vertically? Remember, tc.y goes from 0 to 1 and webcam expects a y coordinate to go from 0 to 1.

- how would you go about rotating the image?
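One possible answer to the flipping question, as a sketch rather than the only way to do it. It assumes the webcam box from the template is on the sheet, and leaves rotation for you to work out.

```glsl
#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;
in vec2 tc;

// -----------------------------------------------------------

void main()
{
    // tc.y runs from 0 (bottom) to 1 (top), so 1.0 - tc.y runs from 1 to 0:
    // each row of the window reads from the mirrored row of the camera
    _output = webcam(tc.x, 1.0 - tc.y);
}
```

Applying the same trick to tc.x instead should mirror the image left to right.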

If you are familiar with Sketch 1, you can now deploy much of what you learned about a single image (there isn’t any way to access what the webcamera was doing in earlier frames, so there is no ‘z’ coordinate here, but see below for an example of doing this with video).

Color manipulation

Playing with tc.xy is interesting, but we can also play further with _output. Consider:

    _output = webcam(tc.x, tc.y);
    _output.r = _output.r * 2;

What do you think happens?

This should make some sense. We’ve doubled the ‘amount’ of red in our image. A color with a red component of 0 is still zero, but anything over 0.5 has as much red as possible in it.

Things to think about:

- think about how to increase the brightness of an image; what about the contrast? what about the saturation? How can you duplicate, say, the ‘levels’ in Photoshop inside GLSL?

- our last two examples have been very well “behaved” in the sense that space-y 2d things like tc control where we read from and color things like _output get manipulated on their own terms. Can you think about when a color might change space or when space might change color? What happens if red parts of the image get distorted by how much green there is there?

- can you combine this with a gradient?
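As a starting point for the brightness, contrast and saturation question, here is a minimal sketch using common textbook formulas. The variable names and the particular values are made up for illustration, and the grey weights are the usual Rec. 601 luma weights, not anything specific to Field.

```glsl
#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;
in vec2 tc;

// -----------------------------------------------------------

void main()
{
    vec4 c = webcam(tc.x, tc.y);

    float brightness = 0.1;  // added to every channel
    float contrast   = 1.5;  // above 1 pushes values away from mid-grey
    float saturation = 0.5;  // 0 is greyscale, 1 leaves the color alone

    // contrast scales around mid-grey (0.5); brightness then shifts everything
    vec3 rgb = (c.rgb - 0.5) * contrast + 0.5 + brightness;

    // saturation blends between a grey version of the pixel and the pixel itself
    float grey = dot(rgb, vec3(0.299, 0.587, 0.114));
    rgb = mix(vec3(grey), rgb, saturation);

    // keep the result in the displayable 0 to 1 range, fully opaque
    _output = vec4(clamp(rgb, 0.0, 1.0), 1.0);
}
```

Try changing one of the three values at a time and reloading the shader to see what each one does on its own.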

Animation

One last parameter completes this GLSL sandbox. u_time is a special variable that changes over time. It’s often quite large, so consider dividing it by 1000 or so before using it. You might also want to build something that oscillates (say, (sin(u_time/1000)+1)/2) or ramps up and cuts back to zero (fract(u_time/1000)). fract is a very useful function that you might not have been introduced to in grade school, partly because it’s so simple: for positive numbers it returns the bit after the decimal point. fract(123.4) is simply 0.4. For negative numbers GLSL computes x - floor(x), so fract(-23.132) is 0.868, not 0.132. Think of this as a way of folding the whole infinite number-line up on top of the part from 0 to 1. It’s a great way of producing a repeating function. In electronic music terms it’s a saw-tooth wave.

With this, you can build an animation:

    _output = webcam( (sin(tc.x*15+u_time/1000)+1)/2, tc.y);

- why are we adding one to sin and dividing by 2?

- why does that sin(tc.x*15+u_time/1000) appear to move something to the left? how would you move something to the right?

- if fract is a saw-tooth wave, and sin is obviously a sine wave, how would you go about making a triangle wave or a square wave?
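One possible answer to the last question, sketched with built-in GLSL functions only. The helper names saw, triangle and square are invented for this example, and the sketch assumes u_time behaves like a floating-point number (which the fract(u_time/1000) example above already relies on).

```glsl
#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;
in vec2 tc;

// -----------------------------------------------------------

// ramps from 0 up to 1, then drops back to 0
float saw(float t)      { return fract(t); }

// folds the ramp in half: falls from 1 to 0, then climbs back to 1
float triangle(float t) { return abs(fract(t) * 2.0 - 1.0); }

// 0 for the first half of each cycle, 1 for the second half
float square(float t)   { return step(0.5, fract(t)); }

void main()
{
    // read across the camera image along a moving triangle wave
    _output = webcam(triangle(tc.x * 2.0 + u_time / 1000.0), tc.y);
}
```

Swapping triangle for saw or square in the last line is a quick way to compare the three shapes.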

Recursion

One thing that might be surprising: there’s nothing ‘special’ about that invocation of webcam in the line _output = webcam(tc.x, tc.y). It works because you have a box called webcam on the sheet somewhere. You can refer to the output of any other box the same way (boxes will complain if you have two that have the same name). This lets you build up little, well-tested modules, and then connect them together. This is exactly the way that ‘modular’ synthesizers work (the ‘wires’ here are names and they contain video frames, not sound).

Furthermore, similar to modular synths, there’s no reason why you can’t tie yourself in a (productive) knot — one box’s code can use the output of a box that’s using the same box as an input. There will be one frame of delay as the signal goes round and around, so you won’t crash anything as you glory in some old-fashioned video feedback. The only thing a box’s code can’t do is refer to the box that it’s in. That doesn’t work on contemporary graphics hardware.
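Here is a sketch of what such a knot might look like, using two boxes. The box names mixer and delay are hypothetical (rename your own boxes to match, or change the code), and the blend amount and zoom factor are arbitrary. Two boxes are needed because a box can't refer to itself. First, the fragment shader of the box called delay, which just passes mixer along:

```glsl
#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;
in vec2 tc;

// -----------------------------------------------------------

void main()
{
    // hypothetical box name: whatever mixer drew, one trip round the loop ago
    _output = mixer(tc.x, tc.y);
}
```

Then the fragment shader of the box called mixer, which blends the live camera with the delayed copy of its own output:

```glsl
#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;
in vec2 tc;

// -----------------------------------------------------------

void main()
{
    vec4 live = webcam(tc.x, tc.y);

    // read the old image from slightly closer to the centre,
    // which magnifies it a little on every trip round the loop
    vec2 p = (tc - 0.5) * 0.98 + 0.5;
    vec4 old = delay(p.x, p.y);

    // mostly old image, a little live camera, fully opaque
    _output = vec4(mix(live.rgb, old.rgb, 0.9), 1.0);
}
```

Send mixer to the main display and wave at the camera; if the description above holds, you should see trails.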

Video

Finally, if you are looking for a real-time way to attack Sketch 1, look no further. All you need to know is how to load a video into this system (specifically, how to load a video onto your graphics hardware). In the tutorial there’s a box called video_sequence which contains a small amount of code to load video:

    _.textureOperator = true
    var TextureArray = Java.type("field.graphics.TextureArray")
    var ta = TextureArray.fromDirectory(_.t_constants.nextUnit(), "x:/IMG_3774.MOV.dir/")
    _.fbo = ta
    _.auto = 10

That “x:/IMG_3774.MOV.dir” should be the path of the video that you want to load; when you have typed that correctly (hint: the slashes go like this /), press option-up-arrow to load your video. Make sure it isn’t too big for your graphics hardware!

One delight of loading the whole video into the spectacularly fast memory on your graphics card is that all of it is available in any order. To access the video (from any other box):

    _output = video_sequence(tc.x, tc.y, fract(u_time/1000)*340);

video_sequence (or whatever your loader is called) takes three parameters, not the usual two. The last one is a frame number. Numbers between frames (like 233.8) will be crossfades between adjacent frames. The above line will play back 340 frames over and over again. You can also do a slit scan again:

    _output = video_sequence(tc.x, tc.y, tc.x*340);

Note that the last parameter is in frames, not a number from 0 to 1.
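The two lines above can be combined into a slit scan that moves. This is only a sketch: it reuses the 340 frames from the examples above (change it to match your own video) and assumes u_time behaves like a floating-point number.

```glsl
#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;
in vec2 tc;

// -----------------------------------------------------------

void main()
{
    // each column of the window shows a different moment of the video;
    // adding time slides that mapping along, and fract wraps it back to 0..1
    float when = fract(tc.x + u_time / 1000.0);

    // scale from 0..1 up to frame numbers
    _output = video_sequence(tc.x, tc.y, when * 340.0);
}
```

Replacing tc.x with tc.y inside fract should turn the vertical slits into horizontal ones.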

“Organic” complexity

Finally, I would be remiss if I did not mention the importance of two techniques for achieving more complex patterns in time and space. The first is the judicious use of “random” numbers to add grain, glitch, noise or chaos to any configuration. The use of random numbers has a long history in art, and we can see the reasons for this most clearly from our digital perspective. How do we get a random number on a graphics card? The short answer is that we don’t: computers are deterministic. The best we can do is to find a short expression of such unfathomable pattern that it looks like it has no structure at all.

Here’s one that many people use:

    float random (vec2 st) {
        return fract(sin(dot(st.xy,vec2(12.9898,78.233)))*43758.5453123);
    }

It’s important to note that this function takes two numbers (typically tc which has both an x and a y component) and produces one random number between 0 and 1. Let’s take a look at it:

#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;

in vec2 tc;

float random (vec2 st) {

return fract(sin(dot(st.xy,vec2(12.9898,78.233)))*43758.5453123);

}

void main() {

vec4 c = webcam(tc.x, tc.y+random(tc));

_output = vec4(c.xyz,1);

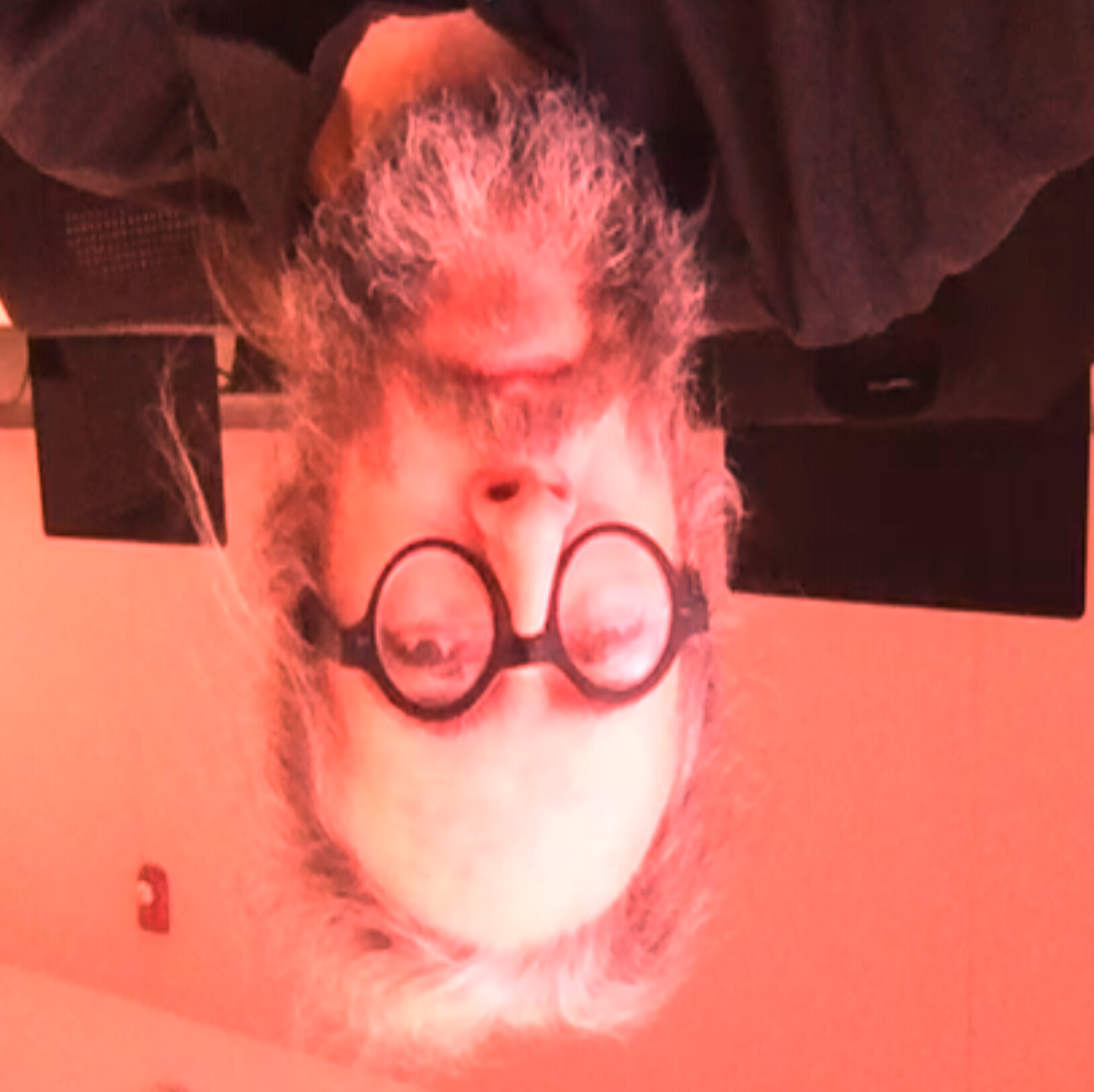

}Yields:

This doesn’t ‘animate’ (although it’s still grabbing from the webcamera, we can still see things underneath). While random gives “random” numbers, it gives the same random number for the same pixel every time. To make this move, something has to change over time. Let’s use u_time.

    #version 410

    ${_._uniform_decl_}

    layout(location=0) out vec4 _output;
    in vec2 tc;

    float random (vec2 st) {
        return fract(sin(dot(st.xy,vec2(12.9898,78.233)))*43758.5453123);
    }

    void main() {
        vec4 c = webcam(tc.x, tc.y+random(tc + vec2(u_time/100000,0)));
        _output = vec4(c.xyz*1,1);
    }

That should be enough to tell you how to make a random pattern like snow from a television, tuned to a dead channel.
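If you want to check your answer, here is one way the snow might be written. It is a sketch that reuses the random function and the u_time offset from above unchanged; the only new name is the local variable n.

```glsl
#version 410

${_._uniform_decl_}

layout(location=0) out vec4 _output;
in vec2 tc;

float random (vec2 st) {
    return fract(sin(dot(st.xy,vec2(12.9898,78.233)))*43758.5453123);
}

void main() {
    // one "random" number per pixel, nudged by time so it differs every frame
    float n = random(tc + vec2(u_time / 100000.0, 0.0));

    // the same value in red, green and blue gives a shade of grey
    _output = vec4(n, n, n, 1.0);
}
```

Mixing this grey with the webcam color, instead of replacing it, should give a grainy camera image rather than pure static.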

What to Google next

Hopefully this page has given you a taste of shader programming, and a nice place to try things out and get feedback quickly and in real time. Shaders are the glue that holds contemporary computer graphics (and much supercomputing) together. If you want to know why a computer game looks the way it does, the answer is always shaders; if you want to know how DaVinci Resolve works: shaders; if you want to know why we are in the middle of an AI revolution: shaders; Pixar’s early victories and clear lead in animated film-making? They got to shaders before anybody else. And so on.

Things you might want to read, with a copy of Field open, include: Gonzalez-Vivo and Lowe’s Book of Shaders, any ShaderToy by Inigo Quilez, or, as bedtime reading for insomniacs, the GLSL language reference.