Now that we’ve got some of the fundamentals of AR under our fingers, let’s look at two dominant platforms for AR experiences through the lens of our class work: two tools, ecosystems, and approaches. The first is Facebook’s emerging little tool for authoring little apps for its platforms; the second is the main event: Unity.

Facebook / Spark AR Studio

AR Studio is a programming environment for making AR experiences that deploy somewhere in Facebook’s empire (so: Facebook, Messenger and Instagram). It’s changed its name twice in the last 3 months, but appears to be called “Spark AR Studio” right now. You can download it for free (although it’s only available for macOS), but it will ask for a Facebook login as soon as you open it.

Its core strength seems to be tracked-object-driven AR experiences, specifically the kinds of animated badges one might find on Instagram. There does not seem to be any translation tracking, world modeling, or other kinds of image detection.

It seems to have sculptures of various sorts (including ones stuck to your face and hand) covered, as long as you are happy to look at them from one angle. No signs of drawers, hypercards, etc.

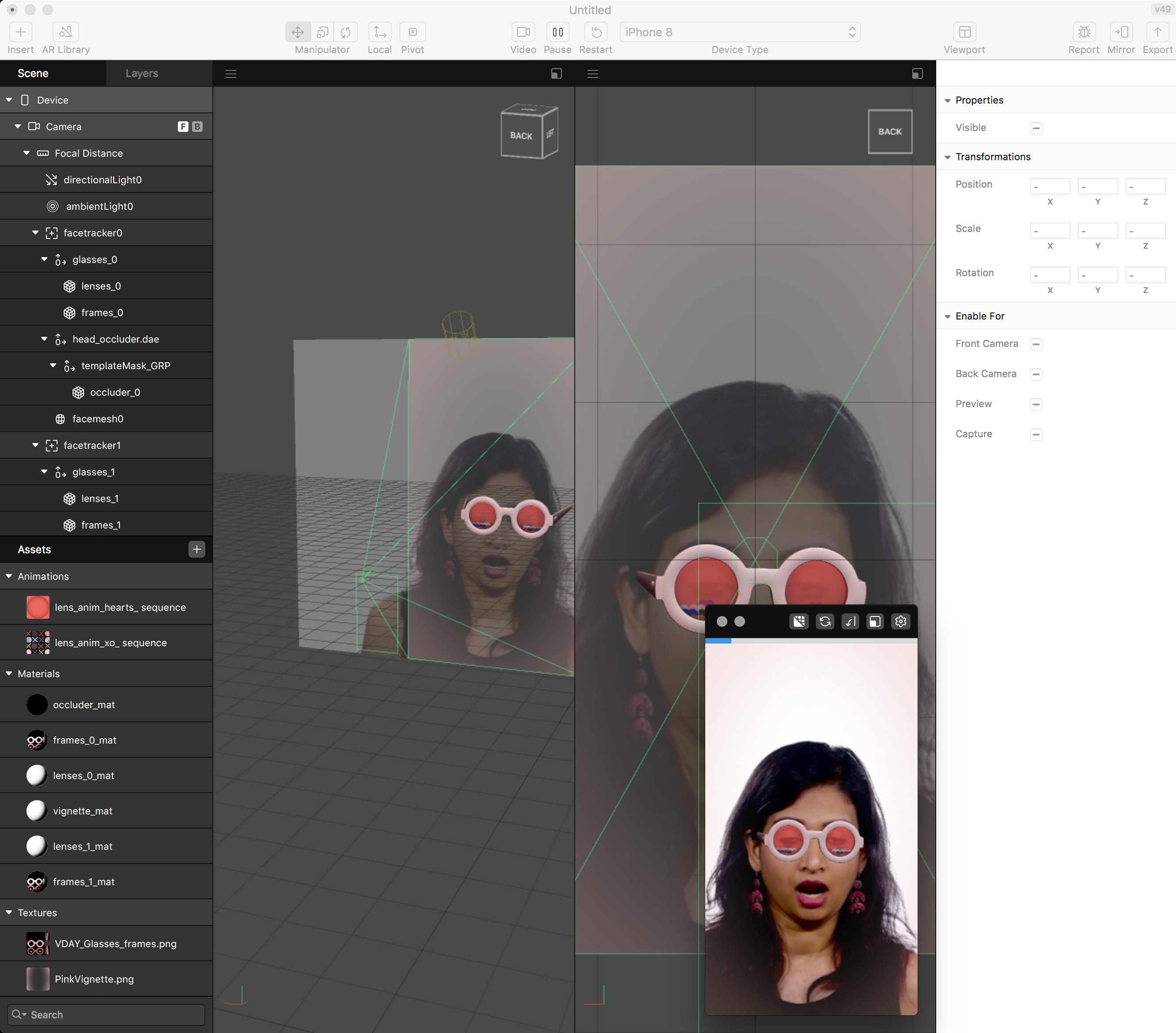

It’s default screen maps nearly one-to-one to Field’s desktop scriptable editor:

The 3D “Scene-graph” is on the top left rather than the top right. But the properties / materials / transforms of the currently selected element are on the right as usual, and there’s the option to have multiple 3D views occupy the middle. There’s a clearer separation between ‘assets’ (things you have loaded as part of your project) and the ‘scene-graph’ (things that are actually deployed and currently being drawn).

Additionally, there’s a ‘device preview’ window that’s designed to eliminate some of the turn-around time. And, similar to Field on iOS, there’s an accompanying mobile app called ‘Spark AR Player’ that hosts test code. Unlike Field, this player is a “stop-clear-play” affair: changes in the editor cannot be sent to the phone without stopping everything and starting again from the top.

The real difference, and the real interest here, has to do with the code that’s gluing things together. First of all, there might not be any apparent, written, or editable code to see. More complex scene-graph objects like face-trackers (which we don’t have, yet?) follow objects around the world and rotate, position, scale and hide their child objects automatically (just like any other node in our scene graph). No code required! This means you can put a pair of glasses on a face without writing or seeing a single line of code.
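To make the “no code required” idea concrete, here is a minimal sketch of how a scene graph carries children along with a tracked parent. It is written in JavaScript, assumes a simplified 2D transform (position, rotation, scale, visibility), and every name in it (“Node”, “onTrackingFrame”, the “faceTracker” and “glasses” nodes) is hypothetical rather than part of Spark AR.

```javascript
// A minimal 2D scene-graph sketch (hypothetical, not Spark AR's API).
// Each node stores a transform relative to its parent; world transforms
// are composed down the tree, so moving a parent moves every child.
class Node {
  constructor(name, { x = 0, y = 0, rotation = 0, scale = 1, visible = true } = {}) {
    this.name = name;
    this.x = x;
    this.y = y;
    this.rotation = rotation; // radians
    this.scale = scale;
    this.visible = visible;
    this.children = [];
    this.parent = null;
  }

  add(child) {
    child.parent = this;
    this.children.push(child);
    return child;
  }

  // Compose this node's local transform with its parent's world transform.
  worldTransform() {
    if (!this.parent) {
      return { x: this.x, y: this.y, rotation: this.rotation, scale: this.scale };
    }
    const p = this.parent.worldTransform();
    const cos = Math.cos(p.rotation);
    const sin = Math.sin(p.rotation);
    return {
      // Scale and rotate the local offset into the parent's frame, then translate.
      x: p.x + p.scale * (cos * this.x - sin * this.y),
      y: p.y + p.scale * (sin * this.x + cos * this.y),
      rotation: p.rotation + this.rotation,
      scale: p.scale * this.scale,
    };
  }

  // A node is drawn only if it and all of its ancestors are visible.
  isDrawn() {
    return this.visible && (this.parent ? this.parent.isDrawn() : true);
  }
}

// Stand-in for a face tracker: the tracking system writes its transform
// every frame; nothing ever touches the glasses directly.
const root = new Node("root");
const faceTracker = root.add(new Node("faceTracker"));
const glasses = faceTracker.add(new Node("glasses", { y: 0.1 }));

// Simulate one frame of tracking data arriving.
function onTrackingFrame(face) {
  faceTracker.x = face.x;
  faceTracker.y = face.y;
  faceTracker.rotation = face.rotation;
  faceTracker.scale = face.scale;
  faceTracker.visible = face.detected;
}

onTrackingFrame({ x: 2, y: 3, rotation: 0, scale: 2, detected: true });
```

The only per-frame code writes the tracker’s transform; the glasses’ world position falls out of composing transforms down the tree, and hiding the tracker hides everything under it.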

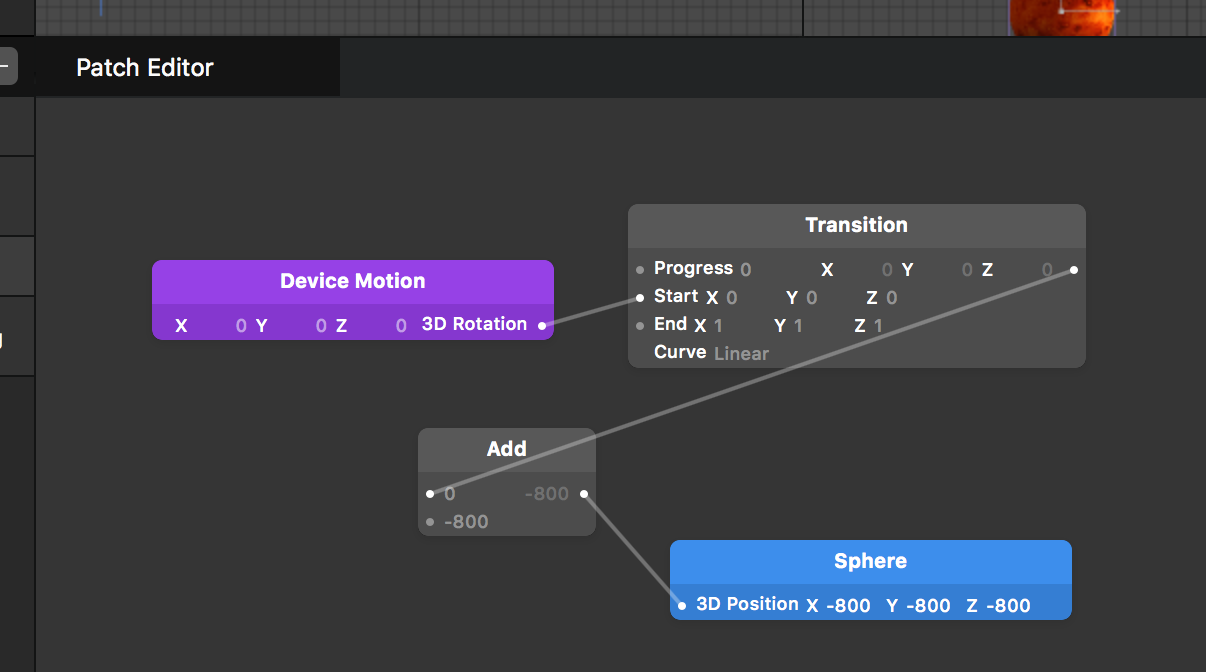

When you might think some code is required Spark AR first try to dissuade you by offering a patch editor:

This lets you “code” inside a dataflow paradigm, connecting the inputs of various objects (material properties, transforms) to various outputs (presence of faces, controller motion). This paradigm is common in music production tools (say, Max, Pd, etc.) and high-end compositing software.
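A patch editor is, roughly, a graph of small functions wired together and re-evaluated every frame. Assuming that model, here is a toy pull-based evaluator in JavaScript; the “patch”, “evaluate” and “runFrame” names and the frame fields (“faceYaw”, “faceFound”) are invented for illustration and are not Spark AR’s patch API.

```javascript
// A toy dataflow "patch" evaluator (hypothetical; not Spark AR's patch API).
// Each patch has inputs wired to other patches' outputs, plus a function
// computing its output. Evaluating once per frame pulls values from the
// sources (tracking data) through to the sinks (object properties).
function patch(name, fn, inputs = []) {
  return { name, fn, inputs };
}

function evaluate(node, frame, cache) {
  if (cache.has(node)) return cache.get(node); // each patch runs once per frame
  const args = node.inputs.map((input) => evaluate(input, frame, cache));
  const value = node.fn(frame, ...args);
  cache.set(node, value);
  return value;
}

// Sources: outputs that come from the outside world each frame.
const faceYaw = patch("faceYaw", (frame) => frame.faceYaw);
const faceFound = patch("faceFound", (frame) => frame.faceFound);

// Processing: ordinary functions, wired together rather than called.
const doubled = patch("multiply", (_frame, a) => a * 2, [faceYaw]);

// Sinks: object inputs (a transform and a visibility flag) driven by the graph.
const hatRotation = patch("hat.rotationY", (_frame, v) => v, [doubled]);
const hatVisible = patch("hat.visible", (_frame, v) => v, [faceFound]);

function runFrame(frame) {
  const cache = new Map();
  return {
    rotationY: evaluate(hatRotation, frame, cache),
    visible: evaluate(hatVisible, frame, cache),
  };
}

const state = runFrame({ faceYaw: 0.25, faceFound: true });
```

Nothing in the graph says when to run, so the host is free to re-evaluate it whenever new tracking data arrives; that freedom is much of the appeal of the dataflow style.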

Finally, if you ‘add script’ inside the assets window, you’ll get the chance to actually write some code:

//Available modules include (this is not a complete list):

var Scene = require('Scene');

var Textures = require('Textures');

var Materials = require('Materials');

var FaceTracking = require('FaceTracking');

var Animation = require('Animation');

var Reactive = require('Reactive');

// Example script

// Loading required modules

var Scene = require('Scene');

var FaceTracking = require('FaceTracking');

// Binding an object's property to a value provided by the face tracker

Scene.root.child('object0').transform.rotationY = FaceTracking.face(0).transform.rotationX;

// If you want to log objects, use the Diagnostics module.

var Diagnostics = require('Diagnostics');

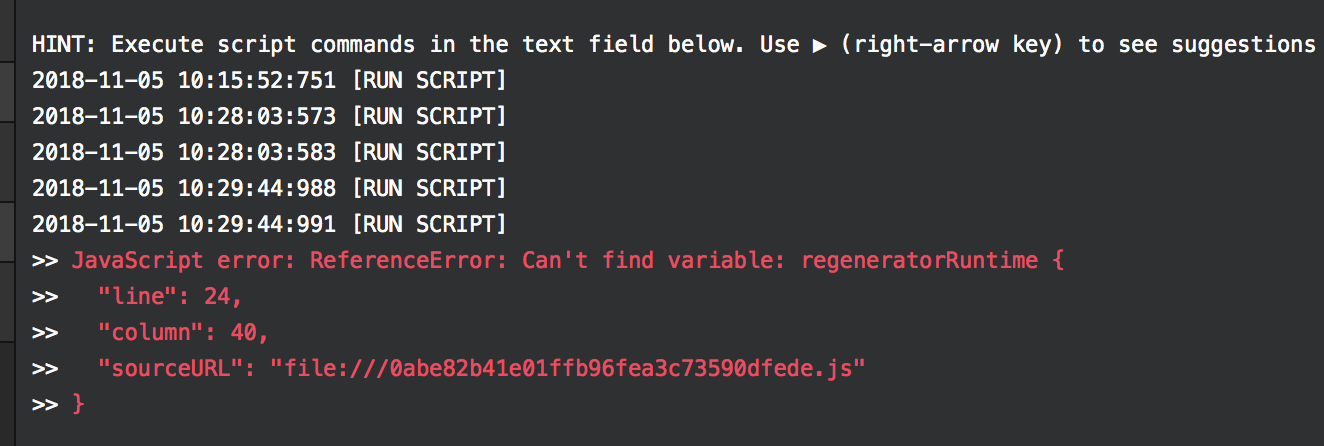

Diagnostics.log(Scene.root);It’s JavaScript! Actually it’s a slightly old version of JavaScript running in a very, very aggressive security sandbox — no network access, no open web. I tried fairly hard to inject Field’s live-coding engine into a running Spark AR app and was thwarted by the geniuses of Menlo Park at every turn. But like Field, Facebook has a hard time getting great error messages out of its JavaScript engine:
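The template’s comment calls its key line a “binding” of an object’s property to a value from the face tracker, which suggests a standing connection rather than a one-off copy. Here is one way binding-by-assignment can work in plain JavaScript, via a property setter that subscribes to a signal. “Signal”, “Transform”, “faceRotationX” and “object0” are hypothetical stand-ins, not Spark AR’s Reactive module.

```javascript
// A minimal sketch of "binding by assignment" (hypothetical; not Spark AR's
// Reactive module). A Signal holds a value and notifies subscribers when it
// changes. Assigning a Signal to a bindable property subscribes the property,
// so it keeps following the signal afterwards.
class Signal {
  constructor(value) {
    this.value = value;
    this.listeners = new Set();
  }
  set(value) {
    this.value = value;
    for (const listener of this.listeners) listener(value);
  }
  subscribe(listener) {
    this.listeners.add(listener);
    listener(this.value); // deliver the current value immediately
    return () => this.listeners.delete(listener); // call to unsubscribe
  }
}

class Transform {
  constructor() {
    this._rotationY = 0;
    this._unbind = null;
  }
  get rotationY() {
    return this._rotationY;
  }
  // Assigning a number sets it once; assigning a Signal creates a binding.
  set rotationY(source) {
    if (this._unbind) {
      this._unbind(); // any new assignment replaces the old binding
      this._unbind = null;
    }
    if (source instanceof Signal) {
      this._unbind = source.subscribe((v) => {
        this._rotationY = v;
      });
    } else {
      this._rotationY = source;
    }
  }
}

// Stand-ins for the face tracker's output and a scene object.
const faceRotationX = new Signal(0);
const object0 = { transform: new Transform() };

// Mirrors the shape of the template's one-liner.
object0.transform.rotationY = faceRotationX;

// Later the tracker updates, and the object follows with no further code.
faceRotationX.set(0.3);
```

In this sketch, assigning a plain number afterwards drops the binding and leaves a fixed value.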

Ultimately, Spark AR Studio and its apps are structured in much the same way as Field’s AR apps on iOS: JavaScript running in a web browser that’s been embedded in a custom app. Here, Facebook’s app adds computer-vision tracking beyond what’s offered by the OS (iOS’s built-in face tracker only works with the front-facing depth camera on iPhone X and above).
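The “JavaScript inside a custom host app” arrangement can be sketched as a host that owns the tracking data and hands scripts a restricted “require”. This is a toy under stated assumptions: “createHost”, “pushTrackingFrame”, “runScript” and the module shapes are hypothetical, and it is not how Spark AR is actually implemented. It only gates which modules a script can ask for; “new Function” is not a real security sandbox.

```javascript
// A toy host app exposing a small, fixed set of modules to user scripts
// (hypothetical; not Spark AR's implementation). The host owns the tracking
// data and pushes it in each frame; scripts reach it only through require().
// NOTE: this gates module access only. new Function() is NOT real isolation;
// a script could still reach JavaScript globals.
function createHost() {
  const face = { rotationX: 0, detected: false };
  const logs = [];
  const modules = {
    FaceTracking: { face: () => face },
    Diagnostics: { log: (value) => logs.push(String(value)) },
  };

  // The only require() user scripts ever see: an allowlist lookup.
  function sandboxRequire(name) {
    if (!Object.prototype.hasOwnProperty.call(modules, name)) {
      throw new Error(`Module '${name}' is not available in this sandbox`);
    }
    return modules[name];
  }

  return {
    logs,
    // Native side: computer-vision results arrive here every frame.
    pushTrackingFrame(rotationX) {
      face.rotationX = rotationX;
      face.detected = true;
    },
    // Script side: user code receives our require() as a parameter.
    runScript(source) {
      return new Function("require", source)(sandboxRequire);
    },
  };
}

const host = createHost();
host.pushTrackingFrame(0.4);
host.runScript(`
  var FaceTracking = require('FaceTracking');
  var Diagnostics = require('Diagnostics');
  Diagnostics.log(FaceTracking.face().rotationX);
`);
```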

Other than that, a lot of effort seems to have gone into industrial packaging and testing workflows: how to bundle up your code, test it on multiple devices, compress it, upload it and distribute it. Even AR Studio’s own templates end up ‘too large’ to export successfully, mindful as Facebook is of data-connection limits in the global market.

Precise control over the late stages of packaging and distribution also gives Facebook the ability (currently untapped) to inject advertising into the ‘scene’. And, short of physically connecting your phone to the development app, there’s no alternative to curated distribution through Facebook. So: Facebook / Spark AR is interesting for building 2D, 2.5D and ‘on face’ experiences, and interesting for its approach to low-code creation. Otherwise, it’s not really the full medium.

Unity

Unity has, slowly, over the last 5 years or so, become the 800-pound gorilla of “Game Engines”. It can be downloaded for free, but you do have to promise not to make more than $100k a year with it. It’s available for Mac and Windows and lets you target any mainstream hardware / OS combination relevant to gaming today, including Android and iOS devices.

Crucially, perhaps because it is both central to a large swath of an industry that drives hardware sales and yet isn’t controlled by any one hardware or OS company, Unity has become an important ‘neutral zone’ for otherwise mutually antagonistic hardware and software companies to support. Both Apple and Google posted Unity code to access their latest AR frameworks immediately upon announcing them. Other than the web — the first and best hope for a functional neutral zone we have — nothing else seems to be able to fill this role right now. While there isn’t much inside Unity that’s conceptually or technically unique, or even particularly well engineered, Unity remains de jure and de facto central to app and ‘experience’ design. I’m told 80% of Sundance’s VR pieces last year ran on Unity.

I’ve made my prejudices against Unity (and, more strictly, against the classroom teaching of Unity) transparently clear, but let’s get to the point where we can build a scene in AR with it anyway. As my grandmother didn’t say: you’ll never be unemployed if you know Unity.

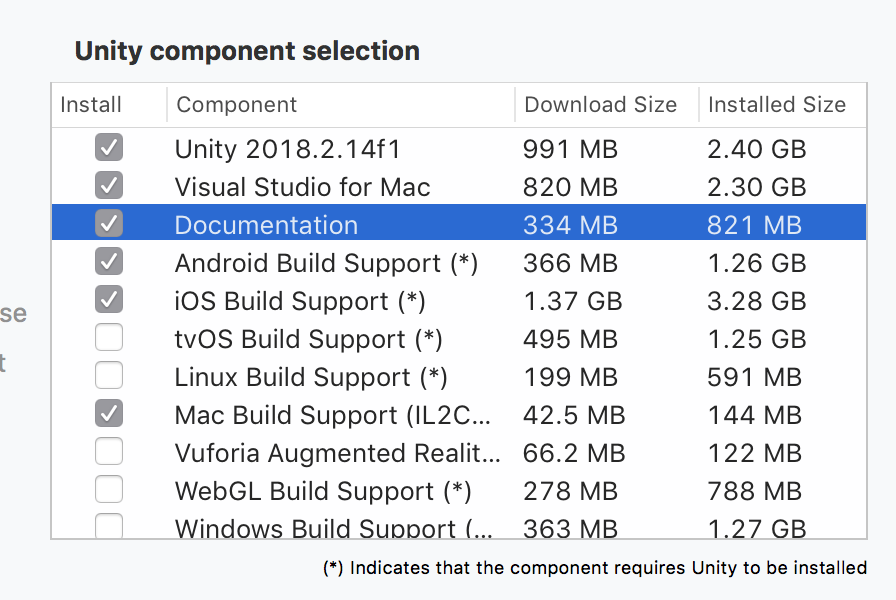

For installation, you’ll want to select something like this:

Unity after 13 years of growth is also colossal:

Expect 10 mins of downloading various things to install.

Almost actually doing some AR

First of all, while Unity is a neutral zone, it’s not a harmonious place. ARKit (Apple) and ARCore (Google) maintain separate and incompatible plugins. If you are making something for iOS you are not making it for Android, and vice versa. You can plaster enough code between the competing plugins and your actual work that you might be able to do both, but that’s up to you. There’s an idea that once this all settles down, AR will be built into Unity in the same way that, say, joystick input is unified around a common set of abstractions, but we’re not there yet.
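The “plaster” between competing plugins is usually an adapter layer: app code is written once against a shared shape, and one adapter per vendor translates into it. In this JavaScript sketch the vendor session objects are fakes that only loosely echo each platform’s vocabulary; none of these names or fields are the real ARKit or ARCore plugin APIs.

```javascript
// Adapter-layer sketch between competing AR plugins (all names hypothetical;
// these are NOT the real ARKit or ARCore plugin APIs).

// Fake vendor sessions with deliberately different conventions.
const fakeArkitSession = {
  anchors: [{ identifier: "a1", center: [0, 0, -1], extent: [0.5, 0, 0.5] }],
};
const fakeArcoreSession = {
  getAllPlanes: () => [{ id: 7, centerPose: { x: 1, y: 0, z: -2 }, extentX: 1, extentZ: 2 }],
};

// Shared shape the app codes against: { id, center: {x, y, z}, width, depth }.
function arkitAdapter(session) {
  return {
    planes: () =>
      session.anchors.map((a) => ({
        id: String(a.identifier),
        center: { x: a.center[0], y: a.center[1], z: a.center[2] },
        width: a.extent[0],
        depth: a.extent[2],
      })),
  };
}

function arcoreAdapter(session) {
  return {
    planes: () =>
      session.getAllPlanes().map((p) => ({
        id: String(p.id),
        center: { x: p.centerPose.x, y: p.centerPose.y, z: p.centerPose.z },
        width: p.extentX,
        depth: p.extentZ,
      })),
  };
}

// App code, written once against the shared shape.
function largestPlane(ar) {
  return ar
    .planes()
    .reduce((best, p) => (!best || p.width * p.depth > best.width * best.depth ? p : best), null);
}

const onIphone = largestPlane(arkitAdapter(fakeArkitSession));
const onAndroid = largestPlane(arcoreAdapter(fakeArcoreSession));
```

The cost is that every feature you use has to be translated twice, and anything one vendor offers that the other lacks either leaks through the abstraction or gets left out.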

So, let’s focus on the majority of the class: iPhones. First you need to download the [unity-arkit plugin]/(https://bitbucket.org/Unity-Technologies/unity-arkit-plugin/downloads/). Once you’ve downloaded and decompressed that you’ll want to dig into the depths of that folder and open:

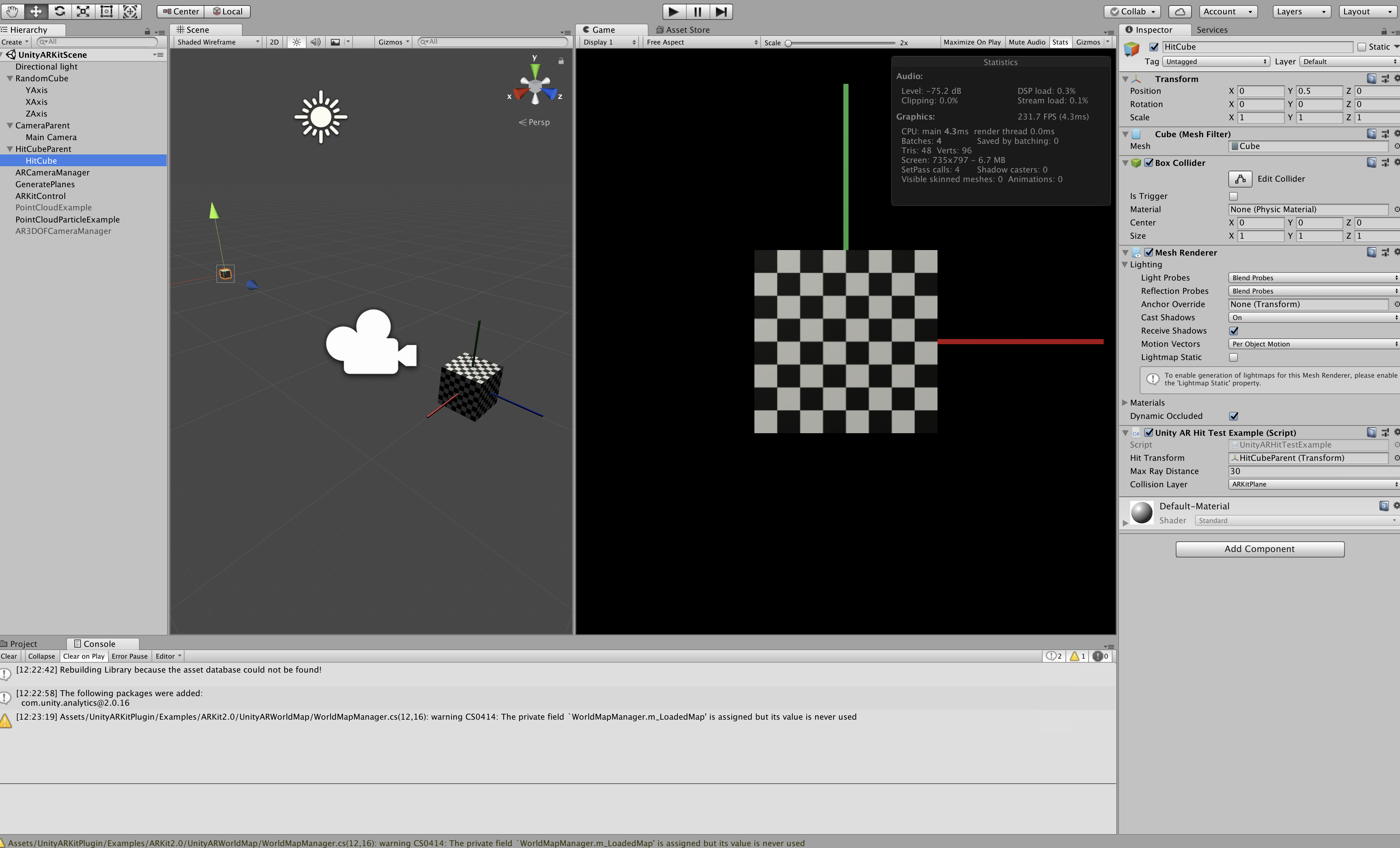

... Assets/UnityARKitPlugin/Examples/UnityARKitScene/UnityARKitScene.unity. You’ll get a couple of warnings there, which can be safely ignored (I think ?!). Eventually you’ll get to:

There’s a lot going on here, but, similar to Spark AR Studio, you can map it onto our Editor / Field. The Scene-graph is top left; properties, materials, transforms are top right; assets (things used but not drawn) are at the bottom. Overlapping with that on another tab is, essentially, your code’s output window (the equivalent of ‘print’ in Field). The key difference in Unity, then, is that Scene Graph objects (called GameObjects) corral, control and invoke code, whereas in Field, code produces and governs the Scene. In Unity, if there isn’t any code then everything draws just fine (perhaps nothing happens?); in Field, if there’s no code then the screen is blank.
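Unity’s arrangement (objects own scripts; the engine calls each script’s Start once and its Update every frame, as with a MonoBehaviour) can be modelled in a few lines. This is a JavaScript toy, not Unity’s C# API: “GameObject”, “Engine”, “tick” and the “Drift” behaviour are hypothetical names chosen to mirror the idea.

```javascript
// A toy version of Unity's arrangement (hypothetical; JavaScript, not C#):
// scene objects own behaviour components, and the engine loop calls each
// component's start() once, then update() every frame.
class GameObject {
  constructor(name) {
    this.name = name;
    this.position = { x: 0, y: 0, z: 0 };
    this.components = [];
  }
  addComponent(component) {
    component.gameObject = this; // the script can reach the object it's attached to
    this.components.push(component);
    return component;
  }
}

class Engine {
  constructor() {
    this.scene = [];
    this.started = new Set();
  }
  // One frame: start any component that hasn't started yet, then update it.
  tick(dt) {
    for (const go of this.scene) {
      for (const c of go.components) {
        if (!this.started.has(c)) {
          if (c.start) c.start();
          this.started.add(c);
        }
        if (c.update) c.update(dt);
      }
    }
  }
}

// A behaviour "script" attached to an object, loosely like a MonoBehaviour.
class Drift {
  constructor(speed) {
    this.speed = speed;
    this.frames = 0;
  }
  start() {
    this.gameObject.position.x = 0;
  }
  update(dt) {
    this.gameObject.position.x += this.speed * dt;
    this.frames += 1;
  }
}

const engine = new Engine();
const cube = new GameObject("HitCube");
const plainCube = new GameObject("PlainCube"); // no scripts attached
engine.scene.push(cube, plainCube);
const drift = cube.addComponent(new Drift(2));

engine.tick(0.5);
engine.tick(0.5);
```

The object with no scripts stays in the scene (and would still be drawn) but nothing happens to it, which is the inverse of Field’s blank screen when there is no code.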

Obviously these starting conditions are just the point of departure. But if you are looking for the actual code in Unity look for scripts attached to GameObjects in the scene:

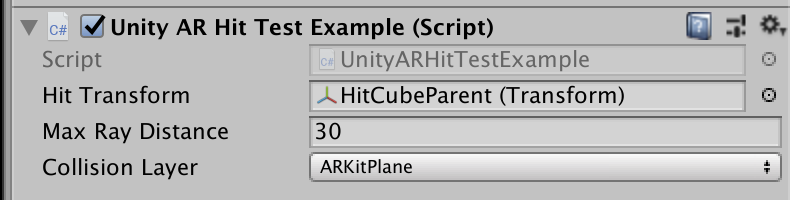

To survive this encounter you’ll have to be willing to engage a mode of seeing where you don’t look at the things that don’t seem to make sense (“Collision Layer”, “Max Ray Distance”? We have no way of knowing what these things are right now, maybe ever). Double click on the (apparently disabled?) script associated with the “HitCube” object called “UnityARHitTestExample” and you’ll get taken to the code in your OS’s code editor of choice:

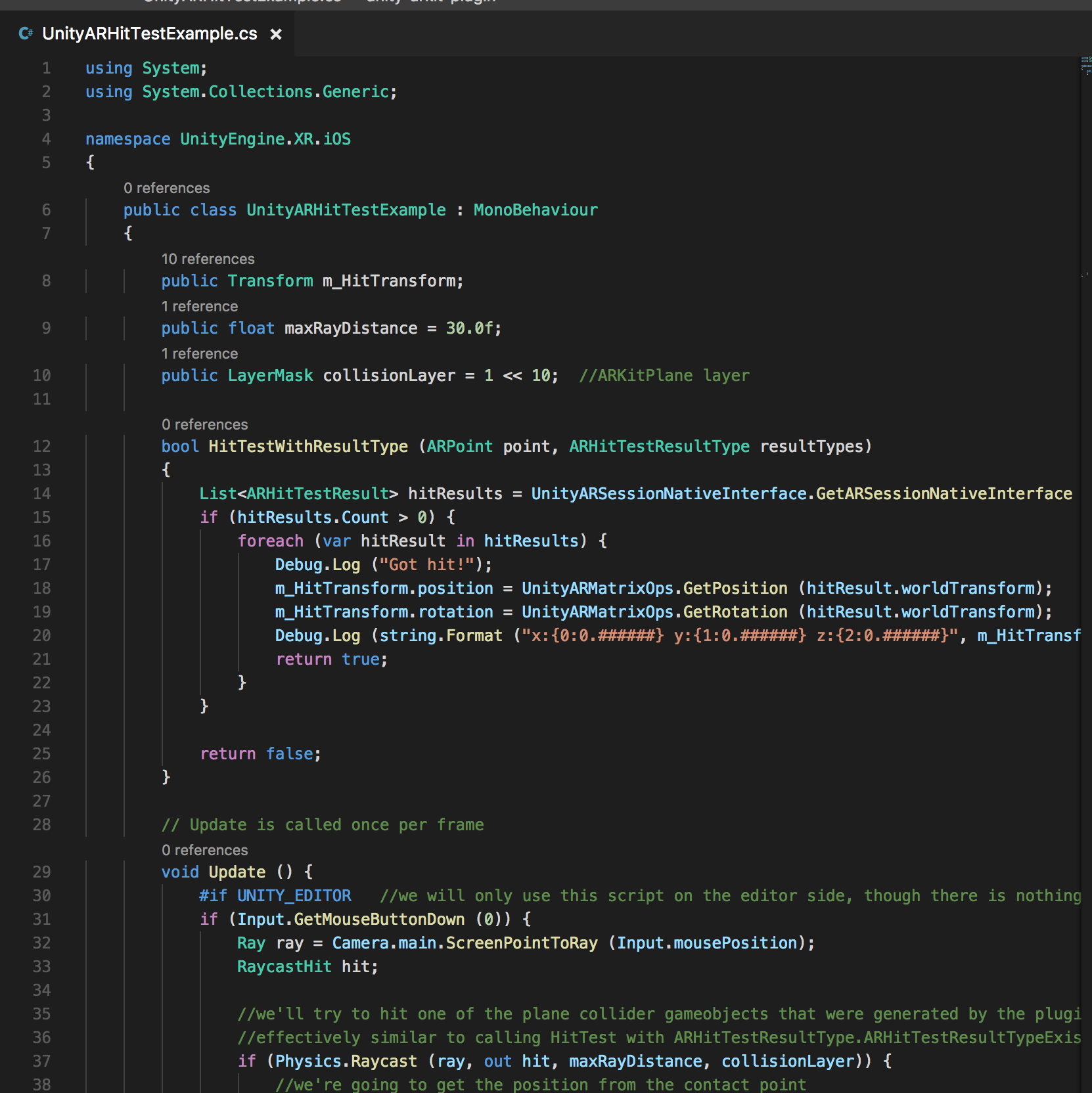

That’s the top half of the script associated with the “HitCube” game object. There’s always something terrifying about two pages of somebody else’s code, so some notes:

- The language is not JavaScript but C#, a language semi-controlled by Microsoft that’s a little like Java and not much like JavaScript. This code passes through at least two or three complete translations before it ends up on your Apple phone, very much not controlled by Microsoft.

- C# is a suit-and-tie-wearing programming language. That you are kicked out of Unity’s editor into something likely made by Microsoft here is no accident: there are coders, there are asset creators, and there are people that link the two together; Unity embodies and maintains this split.

- The words and syntax are different, but the ideas are exactly the same: that “Update” declaration is Field’s “_r”, adding some code to the main animation cycle. The life-cycle of a GameObject is very similar to the life-cycle of a Box in Field.

- The code in there is an event handler of sorts, looking for touch events and responding to them by doing intersection testing against a model of the world.

- Unity can actually place ARKit’s model of the world (the detected planes, for example) into its scene-graph as hidden objects. This lets you reuse all of the machinery that is already in Unity for intersection-testing geometry and for physics. This is a neat and powerful trick: intersection testing is, after all, how you go about shooting things in a first-person shooter.

- Alas, there’s code inside this file for handling both mouse and touch events, depending on whether we are running ‘inside the editor’ or ‘inside the phone’; this makes it harder to understand what’s going on. In Field, those two code-paths are wired through to the same place in your code somewhere behind the scenes.

- Unity comes with a complex renderer that supports, among other things, various ways of rendering shadows and optical effects common in games. This complexity generally means that creating dynamic geometry is difficult. Think of the average computer game: almost all of the geometric complexity of a level is designed ahead of time. If you are looking for the part that builds planes in response to detecting them in the world, you’ll find it very hard to fathom when you find it; it cuts against the idea of a static scene carefully pre-made by professionals.

- This part of the code builds part of the script’s UI in Unity’s editor automatically:

      public Transform m_HitTransform;
      public float maxRayDistance = 30.0f;
      public LayerMask collisionLayer = 1 << 10; // ARKitPlane layer

  That’s pretty handy, and an interesting relationship between code and interface. But again, this is the paradigm: there are people who write code, and then people who tweak the numbers.
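Stripped of Unity and ARKit, the core of the “HitCube” script is a small piece of geometry: cast a ray from the camera through the tapped point, find where it meets a detected plane, and move the cube there. This JavaScript sketch assumes horizontal rectangular planes and a simple pinhole camera, and funnels mouse and touch input into one handler, the way Field does behind the scenes. All names are hypothetical; “maxRayDistance” only echoes the script’s public field, and this is not the plugin’s actual code.

```javascript
// Hit-testing sketch (hypothetical names; not the plugin's code).
// Detected planes, simplified to horizontal rectangles (height y plus x/z bounds).
const planes = [{ y: -1, minX: -2, maxX: 2, minZ: -5, maxZ: -1 }];

// A camera at the origin looking down -z, and a screen size in pixels.
const camera = { x: 0, y: 0, z: 0 };
const screen = { width: 200, height: 100 };

// The object the script moves around.
const hitCube = { position: null };

// Normalized screen point (u, v in [-1, 1]) to a unit-length ray direction.
function screenToRay(u, v) {
  const len = Math.hypot(u, v, 1);
  return { x: u / len, y: v / len, z: -1 / len };
}

// Nearest intersection with any plane within maxRayDistance, or null.
function hitTest(origin, dir, maxRayDistance = 30) {
  let best = null;
  for (const p of planes) {
    if (dir.y === 0) continue; // ray runs parallel to a horizontal plane
    const t = (p.y - origin.y) / dir.y; // distance along the unit-length ray
    if (t <= 0 || t > maxRayDistance) continue; // behind the camera, or too far away
    const x = origin.x + t * dir.x;
    const z = origin.z + t * dir.z;
    if (x < p.minX || x > p.maxX || z < p.minZ || z > p.maxZ) continue; // off the plane's edge
    if (!best || t < best.t) best = { t, point: { x, y: p.y, z } };
  }
  return best;
}

// The single place where a "tap" is handled, whatever produced it.
function onPointer(u, v) {
  const hit = hitTest(camera, screenToRay(u, v));
  if (hit) hitCube.position = hit.point;
  return hit !== null;
}

// Pixels (origin top-left) to normalized coordinates (origin centre, +v up).
function toNormalized(px, py) {
  return { u: (px / screen.width) * 2 - 1, v: 1 - (py / screen.height) * 2 };
}

// Editor path: mouse events.
function onMouseDown(event) {
  const { u, v } = toNormalized(event.clientX, event.clientY);
  return onPointer(u, v);
}

// Phone path: touch events. Both paths end in onPointer.
function onTouchStart(event) {
  const touch = event.touches[0];
  const { u, v } = toNormalized(touch.clientX, touch.clientY);
  return onPointer(u, v);
}
```

For example, a tap at pixel (100, 75) (centre column, below the horizon) lands the cube on the floor plane two units in front of the camera, and a tap above the horizon misses and leaves the cube where it was.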
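The field-to-UI trick can be imitated in JavaScript by enumerating an object’s fields, turning their names into labels, and handing out widgets that write straight back to the object. This is a loose, hypothetical imitation: “nicify” only approximates how Unity’s editor labels fields (Unity likewise strips an “m_” prefix and splits camelCase), “buildInspector” is invented, and the collision layer is treated as a plain number rather than the layer picker Unity would show.

```javascript
// Generating an "inspector" from a script's public fields (hypothetical;
// a loose imitation of what Unity's editor does for C# scripts).

// Approximate label rule: drop an "m_" or "_" prefix, split camelCase, capitalise.
function nicify(name) {
  const stripped = name.replace(/^(m_|_)/, "");
  const spaced = stripped.replace(/([a-z0-9])([A-Z])/g, "$1 $2");
  return spaced.charAt(0).toUpperCase() + spaced.slice(1);
}

// One widget description per numeric or boolean field; edits write back.
function buildInspector(script) {
  return Object.keys(script)
    .filter((key) => ["number", "boolean"].includes(typeof script[key]))
    .map((key) => ({
      label: nicify(key),
      kind: typeof script[key] === "number" ? "numberField" : "checkbox",
      get: () => script[key],
      set: (value) => {
        script[key] = value;
      },
    }));
}

// Stand-in for the HitCube script's tweakable values.
// (Unity would show a layer picker for a LayerMask; here it is just a number.)
const hitTestScript = { maxRayDistance: 30.0, collisionLayer: 1 << 10, m_debugDraw: false };

const inspector = buildInspector(hitTestScript);

// Someone who "tweaks the numbers" edits a field without touching the code.
inspector[0].set(12);
```

The labels come out as “Max Ray Distance”, “Collision Layer” and “Debug Draw”, and the edit changes the script object directly.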

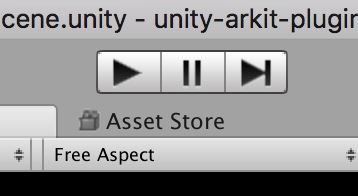

Let’s say we don’t run screaming and, instead, we want to launch this code somehow to experience some AR. We are certainly in the land of the play/stop/clear button:

Unfortunately this is where things get messy. We have two ways of doing this.

The first is to launch into a live app on the cell phone, à la Field. Unfortunately, the special app we need to use for AR development is not distributed through the App Store, so you have to build it yourself. Building apps for deployment on iPhones necessitates engaging with Apple’s developer tools (which are infamously complex) and Apple’s mobile developer device authorization system which, last I checked, cost $100 a year to sign up for. Without Apple’s permission, even running your own code on your own phone is impossible. This is what we’ve been doing behind the scenes for the iOS Field app.

The second option is worse: just do what you are going to end up doing in the end anyway, and build a complete App. All Unity will do for you here is set everything up and pass you off to Apple’s development tools. So you are in no better shape. Worse, builds take 1-2 minutes to complete every time you change the color of an object, or the scale on a box.

Furthermore, right now the first option just doesn’t work on iOS 12! If you’d like to pursue the second option I’m happy to try to take you through the Apple signup process during office hours. We’ll take a brief look at some features in Unity that certainly aren’t in Field (yet?) during class (like the 3d physics engine).

In short, making AR experiences in Unity is a heavier lift than making desktop or even console computer games. Without being able to interact with code in close proximity to the actual medium you are creating an experience for, are you really creating for the medium? Unity makes a case for moving large, capable teams of console and PC game-makers closer to delivering AR work on phones; but AR itself poses particular difficulties for development that Unity doesn’t have the answers to yet.