The Person Detector

Prompted by Zach & Xander — Field support for loading object bounding boxes detected by a deep-learning object detector (specifically, here, I’m using a slightly modified Darknet implementation of YOLO2). Right now this works like the sound analysis works: you send me data (here, video) and I’ll send you an analysis package that Field can load. Here’s the code:

// build a layer with a video associated with it

var layer = _.stage.withImageSequence("/Users/marc/Desktop/zachAndXander/test1/src/")

// let's just put the image over the whole Stage

var f = new FLine().rect(0,0,100,100)

f.filled=true

f.color=vec(1,1,1,1)

layer.bakeTexture(f)

layer.lines.f = f

// this, as before, controls the time of the video

layer.time=0.1

That will give you a still from a video (and you ought to be able to figure out how to animate layer.time to get the video to play).
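As a minimal sketch of one way to animate layer.time: map a frame counter onto a time value that loops. This assumes layer.time runs from 0 (start of the video) to 1 (end), which is how the longer example further down uses it. loopTime and framesPerLoop are hypothetical names, not part of Field, and the commented-out loop assumes _.stage.frame() waits for the next frame to be drawn.

```javascript
// Hypothetical helper (not part of Field): maps a frame counter to a
// normalized time in [0, 1) that wraps around every `framesPerLoop` frames.
function loopTime(frame, framesPerLoop) {
	return (frame % framesPerLoop) / framesPerLoop
}

// Inside Field you might drive the video like this, using the `layer`
// built above (assumption: `_.stage.frame()` waits for the next frame):
//
// var frame = 0
// while (true) {
// 	layer.time = loopTime(frame++, 1000)
// 	_.stage.frame()
// }
```

A larger framesPerLoop gives slower playback; 1000 here is an arbitrary value, not one tied to the real frame rate of the video.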

Now let's add the analysis data to it:

// secret incantation to import the PersonDetector

var PersonDetector = Java.type("trace.util.PersonDetector")

// load the data file I have given you

// you have to give the dimensions of the original video file

var pd = new PersonDetector("/Users/marc/Desktop/zachAndXander/test1_run_yolo", 1280, 720)

// let's use a new layer

var annotations = _.stage.withName("annotations")

// and draw all of the bounding rectangles at time `layer.time`

annotations.lines.clear()

for(var rect of pd.rectsAt(layer.time))

{

var r = new FLine().rect(rect)

r.filled=true

r.color=vec(1,1,1,0.5)

annotations.lines.add(r)

}

The new code here is pd.rectsAt(layer.time), which gives you a list of Rects holding the position (in standard Stage 0-100 coordinates) of each person found. Rect is a structure with x, y, w and h.
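To make the coordinate system concrete, here is a small sketch of how a box measured in video pixels could end up in Stage coordinates, and how to get the midpoint of a Rect. It assumes a plain linear rescale on each axis (presumably the reason the constructor asks for the original video dimensions); the conversion PersonDetector actually performs is not shown here, and pixelRectToStage and rectCenter are hypothetical helpers.

```javascript
// Hypothetical helper: scale a pixel-space box into Stage coordinates
// (0-100 on both axes). Assumes a simple linear rescale per axis.
function pixelRectToStage(px, videoWidth, videoHeight) {
	return {
		x: 100 * px.x / videoWidth,
		y: 100 * px.y / videoHeight,
		w: 100 * px.w / videoWidth,
		h: 100 * px.h / videoHeight
	}
}

// Midpoint of any Rect-like object with x, y, w and h fields.
function rectCenter(rect) {
	return { x: rect.x + rect.w / 2, y: rect.y + rect.h / 2 }
}

// A box covering the middle half of a 1280x720 frame
var stageRect = pixelRectToStage({ x: 320, y: 180, w: 640, h: 360 }, 1280, 720)
var center = rectCenter(stageRect) // the middle of the Stage
```

Since the rects you get back are already in Stage coordinates, only something like rectCenter is needed in practice; the conversion is here to show why the numbers fall between 0 and 100.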

This is fairly representative of state of the art a year or so ago — people come and go occasionally, and pairs of people can pose problems — and very representative of what the noise on raw tracking data of any kind looks like (from video detectors through pitch detectors in the sonic domain).

Advanced interface

Sometimes knowing what rectangles are where at a given frame isn’t enough: if you want to structure your code around longer term patterns, you need to know how a particular rectangle moves over time. This isn’t information that the tracker provides directly (it merely looks frame-by-frame at the video), but it is information that we can have the computer try to infer. To get closer to this structure, two features:

pd.rectsAt(layer.time) returns a list of Rects but, in addition to x, y, w, h, there’s also a name field. This name will be something like track57 or track102. Rects with the same name come from the same ‘track’, a continuously tracked rectangle.

pd.tracksAtTime(layer.time) returns a list of Tracks, each describing how a Rect moves over the time range between track.startTime() and track.endTime(). You can get the (smoothly interpolated) Rect at time t by calling track.rectAtTime(t).
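To give a feel for what these two features amount to, here is a rough sketch in plain JavaScript: group per-frame samples by their name, then interpolate between the samples of one track. This is an illustration under stated assumptions, not PersonDetector's implementation: the sample format ({t, name, x, y, w, h}) is made up, the functions are hypothetical, and the interpolation is simply linear, whereas the real track.rectAtTime(t) may use a smoother scheme.

```javascript
// Illustration only: none of these functions are part of PersonDetector.

// Group per-frame samples ({t, name, x, y, w, h}) into one keyframe list
// per track name, keeping the order in which the samples arrive.
function groupByName(samples) {
	var tracks = {}
	for (var i = 0; i < samples.length; i++) {
		var s = samples[i]
		if (!tracks[s.name]) tracks[s.name] = []
		tracks[s.name].push(s)
	}
	return tracks
}

function lerp(a, b, alpha) {
	return a + (b - a) * alpha
}

function copyRect(k) {
	return { x: k.x, y: k.y, w: k.w, h: k.h }
}

// Linearly interpolate a rect from keyframes sorted by strictly increasing t.
// Times outside the track are clamped to the first or last keyframe.
function rectAtTime(keys, t) {
	var first = keys[0]
	var last = keys[keys.length - 1]
	if (t <= first.t) return copyRect(first)
	if (t >= last.t) return copyRect(last)

	// find the pair of keyframes that brackets t
	for (var i = 0; i < keys.length - 1; i++) {
		var a = keys[i]
		var b = keys[i + 1]
		if (t >= a.t && t <= b.t) {
			var alpha = (t - a.t) / (b.t - a.t)
			return {
				x: lerp(a.x, b.x, alpha),
				y: lerp(a.y, b.y, alpha),
				w: lerp(a.w, b.w, alpha),
				h: lerp(a.h, b.h, alpha)
			}
		}
	}
}

// Made-up samples: two detections of track57 and one of track102
var tracks = groupByName([
	{ t: 0, name: "track57", x: 0, y: 0, w: 10, h: 10 },
	{ t: 0, name: "track102", x: 50, y: 50, w: 5, h: 5 },
	{ t: 1, name: "track57", x: 10, y: 20, w: 20, h: 10 }
])
var halfway = rectAtTime(tracks.track57, 0.5)
```

Clamping outside the time range is a choice made for this sketch; how the real Track behaves outside startTime() and endTime() is not covered here.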

For example, this code shows the current bounding boxes and their tracks:

var PersonDetector = Java.type("trace.util.PersonDetector")

// build a layer with a video associated with it

var layer = _.stage.withImageSequence("/Users/marc/Desktop/zachAndXander/test1/src/")

var pd = new PersonDetector("/Users/marc/Desktop/zachAndXander/test1_run_yolo", 1280, 720)

var f = new FLine().rect(0,0,100,100)

f.filled=true

f.color=vec(1,1,1,1)

layer.bakeTexture(f)

layer.lines.f = f

while(true)

for(var t of Anim.lineRange(0, 1, 10000))

{

_.stage.frame()

layer.time=t

var annotations = _.stage.withName("annotations")

annotations.lines.clear()

// get all tracks that belong to rectangles at this time

for(var track of pd.tracksAt(layer.time))

{

var rect = track.rectAtTime(layer.time)

var r = new FLine().rect(rect)

r.filled=true

r.color=vec(1,1,1,0.5)

annotations.lines.add(r)

// trace the midpoint of each rectangle over time

var ff = new FLine()

// take 200 samples over the whole course of the track

for(var q of Anim.lineRange(track.startTime(), track.endTime(), 200))

{

var re = track.rectAtTime(q)

// line to the midpoint of this rect

ff.lineTo(re.x+re.w/2, re.y+re.h/2)

}

ff.fastThicken = 0.5

ff.color=vec(1,1,0,1)

annotations.lines.add(ff)

}

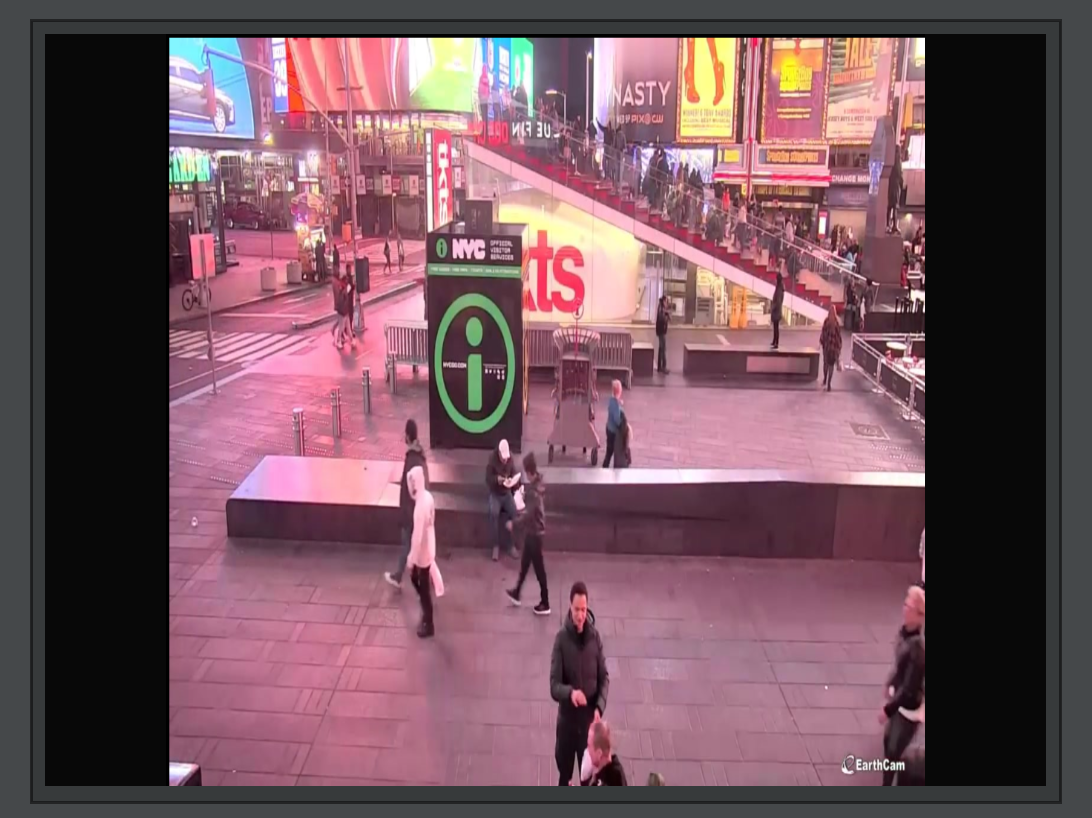

}Yielding the longer term structure of the person detector: